By: Juhani Kivimäki, Jakub Białek, Wojtek Kuberski, Jukka K. Nurminen

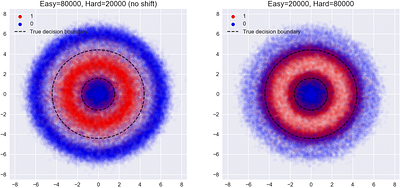

Model monitoring is a critical component of the machine learning lifecycle, safeguarding against undetected drops in the model's performance after deployment. Traditionally, performance monitoring has required access to ground truth labels, which are not always readily available. This can result in unacceptable latency or render performance monitoring altogether impossible. Recently, methods designed to estimate the accuracy of classifier models without access to labels have shown promising results. However, there are various other metrics that might be more suitable for assessing model performance in many cases. Until now, none of these important metrics has received similar interest from the scientific community. In this work, we address this gap by presenting CBPE, a novel method that can estimate any binary classification metric defined using the confusion matrix. In particular, we choose four metrics from this large family (accuracy, precision, recall, and $F_1$) to demonstrate our method. CBPE treats the elements of the confusion matrix as random variables and leverages calibrated confidence scores of the model to estimate their distributions. The desired metric is then also treated as a random variable, whose full probability distribution can be derived from the estimated confusion matrix. CBPE is shown to produce estimates that come with strong theoretical guarantees and valid confidence intervals.
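To make the idea of estimating confusion-matrix metrics without labels concrete, the following is a minimal sketch, not the authors' CBPE implementation. It assumes each score is a perfectly calibrated probability of the positive class and computes only the expected confusion-matrix counts, then plugs them into the metric formulas. The function names and the 0.5 threshold are illustrative assumptions; the abstract describes deriving full distributions, which this sketch does not attempt.

```python
import numpy as np


def expected_confusion_matrix(scores, threshold=0.5):
    """Expected TP, FP, FN, TN given calibrated P(y=1 | x) scores.

    Assumption: scores are calibrated, so a predicted positive with
    score p contributes p to TP and (1 - p) to FP, and similarly for
    predicted negatives.
    """
    scores = np.asarray(scores, dtype=float)
    predicted_positive = scores >= threshold
    tp = scores[predicted_positive].sum()
    fp = (1.0 - scores[predicted_positive]).sum()
    fn = scores[~predicted_positive].sum()
    tn = (1.0 - scores[~predicted_positive]).sum()
    return tp, fp, fn, tn


def estimated_metrics(scores, threshold=0.5):
    """Plug-in point estimates of four metrics from expected counts."""
    tp, fp, fn, tn = expected_confusion_matrix(scores, threshold)
    total = tp + fp + fn + tn
    return {
        "accuracy": (tp + tn) / total,
        "precision": tp / (tp + fp) if tp + fp > 0 else float("nan"),
        "recall": tp / (tp + fn) if tp + fn > 0 else float("nan"),
        "f1": 2 * tp / (2 * tp + fp + fn) if tp + fp + fn > 0 else float("nan"),
    }


if __name__ == "__main__":
    print(estimated_metrics([0.9, 0.8, 0.3, 0.1]))
```

The accuracy estimate here equals the expected accuracy exactly under the calibration assumption. The ratio metrics (precision, recall, $F_1$) are only plug-in approximations, because the expectation of a ratio is generally not the ratio of expectations.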

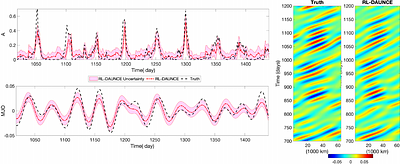

By: Pouria Behnoudfar, Nan Chen

Machine learning has become a powerful tool for enhancing data assimilation. While supervised learning remains the standard method, reinforcement learning (RL) offers unique advantages through its sequential decision-making framework, which naturally fits the iterative nature of data assimilation by dynamically balancing model forecasts with observations. We develop RL-DAUNCE, a new RL-based method that enhances data assimilation with physical constraints through three key aspects. First, RL-DAUNCE inherits the computational efficiency of machine learning while uniquely structuring its agents to mirror ensemble members in conventional data assimilation methods. Second, RL-DAUNCE emphasizes uncertainty quantification by advancing multiple ensemble members, moving beyond simple mean-state optimization. Third, RL-DAUNCE's ensemble-as-agents design facilitates the enforcement of physical constraints during the assimilation process, which is crucial to improving the state estimation and subsequent forecasting. A primal-dual optimization strategy is developed to enforce constraints; it dynamically penalizes the reward function to ensure constraint satisfaction throughout the learning process. In addition, state variable bounds are respected by constraining the RL action space. Together, these features ensure physical consistency without sacrificing efficiency. RL-DAUNCE is applied to the Madden-Julian Oscillation, an intermittent atmospheric phenomenon characterized by strongly non-Gaussian features and multiple physical constraints. RL-DAUNCE outperforms the standard ensemble Kalman filter (EnKF), which fails catastrophically due to the violation of physical constraints. Notably, RL-DAUNCE matches the performance of constrained EnKF, particularly in recovering intermittent signals, capturing extreme events, and quantifying uncertainties, while requiring substantially less computational effort.
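The primal-dual penalty on the reward can be illustrated with a generic Lagrangian sketch. This is not RL-DAUNCE's actual update rule, which the abstract does not specify; the function names, the scalar `violation` signal, and the step size are all hypothetical. The idea shown is only that a multiplier grows while a constraint is violated and is projected back to be non-negative.

```python
def penalized_reward(reward, violation, multiplier):
    """Primal side: subtract the weighted constraint violation from the reward.

    `violation` is assumed positive when the constraint is broken and
    non-positive when it is satisfied (hypothetical convention).
    """
    return reward - multiplier * violation


def update_multiplier(multiplier, violation, step_size=0.1):
    """Dual side: gradient ascent on the multiplier, projected onto [0, inf)."""
    return max(0.0, multiplier + step_size * violation)


if __name__ == "__main__":
    multiplier = 0.0
    # Hypothetical sequence of constraint violations over training steps.
    for violation in [2.0, 1.0, 0.5, -0.5]:
        multiplier = update_multiplier(multiplier, violation)
        print(multiplier, penalized_reward(1.0, violation, multiplier))
```

With this design, the penalty weight adapts during learning rather than being fixed in advance, which matches the abstract's description of a reward that is penalized dynamically.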

By: Ruxue Shi, Hengrui Gu, Hangting Ye, Yiwei Dai, Xu Shen, Xin Wang

Few-shot tabular learning, in which machine learning models are trained with a limited amount of labeled data, provides a cost-effective approach to addressing real-world challenges. The advent of Large Language Models (LLMs) has sparked interest in leveraging their pre-trained knowledge for few-shot tabular learning. Despite promising results, existing approaches either rely on test-time knowledge extraction, which introduces undesirable latency, or text-level knowledge, which leads to unreliable feature engineering. To overcome these limitations, we propose Latte, a training-time knowledge extraction framework that transfers the latent prior knowledge within LLMs to optimize a more generalized downstream model. Latte enables general knowledge-guided downstream tabular learning, facilitating the weighted fusion of information across different feature values while reducing the risk of overfitting to limited labeled data. Furthermore, Latte is compatible with existing unsupervised pre-training paradigms and effectively utilizes available unlabeled samples to overcome the performance limitations imposed by an extremely small labeled dataset. Extensive experiments on various few-shot tabular learning benchmarks demonstrate the superior performance of Latte, establishing it as a state-of-the-art approach in this domain.

By: Robert Busa-Fekete, Travis Dick, Claudio Gentile, Haim Kaplan, Tomer Koren, Uri Stemmer

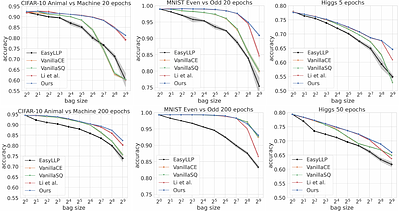

We investigate Learning from Label Proportions (LLP), a partial information setting where examples in a training set are grouped into bags, and only aggregate label values in each bag are available. Despite the partial observability, the goal is still to achieve small regret at the level of individual examples. We give results on the sample complexity of LLP under square loss, showing that our sample complexity is essentially optimal. From an algorithmic viewpoint, we rely on carefully designed variants of Empirical Risk Minimization and Stochastic Gradient Descent algorithms, combined with ad hoc variance reduction techniques. On one hand, our theoretical results improve in important ways on the existing literature on LLP, specifically in the way the sample complexity depends on the bag size. On the other hand, we validate our algorithmic solutions on several datasets, demonstrating improved empirical performance (better accuracy with fewer samples) against recent baselines.
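The LLP setting can be illustrated with a small sketch of a bag-level square loss: the learner sees only each bag's label proportion, so the loss compares the mean prediction in a bag with that proportion. This is a naive baseline objective for illustration, not the authors' estimator, which the abstract says uses specially designed ERM and SGD variants with variance reduction. The function name and data layout are assumptions.

```python
import numpy as np


def bag_square_loss(predictions, bag_ids, bag_proportions):
    """Mean squared gap between per-bag mean prediction and label proportion.

    predictions:     per-example model outputs in [0, 1]
    bag_ids:         bag identifier for each example
    bag_proportions: dict mapping bag identifier -> observed label proportion
    """
    predictions = np.asarray(predictions, dtype=float)
    bag_ids = np.asarray(bag_ids)
    losses = []
    for bag, proportion in bag_proportions.items():
        mean_prediction = predictions[bag_ids == bag].mean()
        losses.append((mean_prediction - proportion) ** 2)
    return float(np.mean(losses))


if __name__ == "__main__":
    # Two bags of two examples each; only the proportions are observed.
    print(bag_square_loss([1.0, 0.0, 1.0, 1.0], [0, 0, 1, 1], {0: 0.5, 1: 0.5}))
```

Note that a bag-level loss of zero does not imply correct individual predictions (bag 0 above matches its proportion regardless of which example is positive), which is why achieving small regret at the level of individual examples is the harder goal.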

By: Nataliia Klievtsova, Timotheus Kampik, Juergen Mangler, Stefanie Rinderle-Ma

With the recent success of large language models (LLMs), the idea of AI-augmented Business Process Management systems is becoming more feasible. One of their essential characteristics is the ability to be conversationally actionable, allowing humans to interact with the LLM effectively to perform crucial process life cycle tasks such as process model design and redesign. However, most current research focuses on single-prompt execution and evaluation of results, rather than on continuous interaction between the user and the LLM. In this work, we aim to explore the feasibility of using LLMs to empower domain experts in the creation and redesign of process models in an iterative and effective way. The proposed conversational process model redesign (CPD) approach receives as input a process model and a redesign request by the user in natural language. Instead of just letting the LLM make changes, the LLM is employed to (a) identify process change patterns from literature, (b) re-phrase the change request to be aligned with an expected wording for the identified pattern (i.e., the meaning), and then to (c) apply the meaning of the change to the process model. This multi-step approach allows for explainable and reproducible changes. In order to ensure the feasibility of the CPD approach, and to find out how well the patterns from literature can be handled by the LLM, we performed an extensive evaluation. The results show that some patterns are hard for both LLMs and users to understand. Within the scope of the study, we demonstrated that users need support to describe the changes clearly. Overall, the evaluation shows that the LLMs can handle most changes well according to a set of completeness and correctness criteria.

By: Marvin F. da Silva, Felix Dangel, Sageev Oore

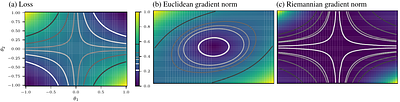

The concept of sharpness has been successfully applied to traditional architectures like MLPs and CNNs to predict their generalization. For transformers, however, recent work reported weak correlation between flatness and generalization. We argue that existing sharpness measures fail for transformers, because they have much richer symmetries in their attention mechanism that induce directions in parameter space along which the network or its loss remains identical. We posit that sharpness must account fully for these symmetries, and thus we redefine it on a quotient manifold that results from quotienting out the transformer symmetries, thereby removing their ambiguities. Leveraging tools from Riemannian geometry, we propose a fully general notion of sharpness, in terms of a geodesic ball on the symmetry-corrected quotient manifold. In practice, we need to resort to approximating the geodesics. Doing so up to first order yields existing adaptive sharpness measures, and we demonstrate that including higher-order terms is crucial to recover correlation with generalization. We present results on diagonal networks with synthetic data, and show that our geodesic sharpness reveals strong correlation for real-world transformers on both text and image classification tasks.

By: Zhaohan Feng, Ruiqi Xue, Lei Yuan, Yang Yu, Ning Ding, Meiqin Liu, Bingzhao Gao, Jian Sun, Gang Wang

Embodied artificial intelligence (Embodied AI) plays a pivotal role in the application of advanced technologies in the intelligent era, where AI systems are integrated with physical bodies that enable them to perceive, reason, and interact with their environments. Through the use of sensors for input and actuators for action, these systems can learn and adapt based on real-world feedback, allowing them to perform tasks effectively in dynamic and unpredictable environments. As techniques such as deep learning (DL), reinforcement learning (RL), and large language models (LLMs) mature, embodied AI has become a leading field in both academia and industry, with applications spanning robotics, healthcare, transportation, and manufacturing. However, most research has focused on single-agent systems that often assume static, closed environments, whereas real-world embodied AI must navigate far more complex scenarios. In such settings, agents must not only interact with their surroundings but also collaborate with other agents, necessitating sophisticated mechanisms for adaptation, real-time learning, and collaborative problem-solving. Despite increasing interest in multi-agent systems, existing research remains narrow in scope, often relying on simplified models that fail to capture the full complexity of dynamic, open environments for multi-agent embodied AI. Moreover, no comprehensive survey has systematically reviewed the advancements in this area. As embodied AI rapidly evolves, it is crucial to deepen our understanding of multi-agent embodied AI to address the challenges presented by real-world applications. To fill this gap and foster further development in the field, this paper reviews the current state of research, analyzes key contributions, and identifies challenges and future directions, providing insights to guide innovation and progress.