Robustly interrogating machine learning based scoring functions: what are they learning?

Durant, G.; Boyles, F.; Birchall, K.; Marsden, B.; Deane, C.

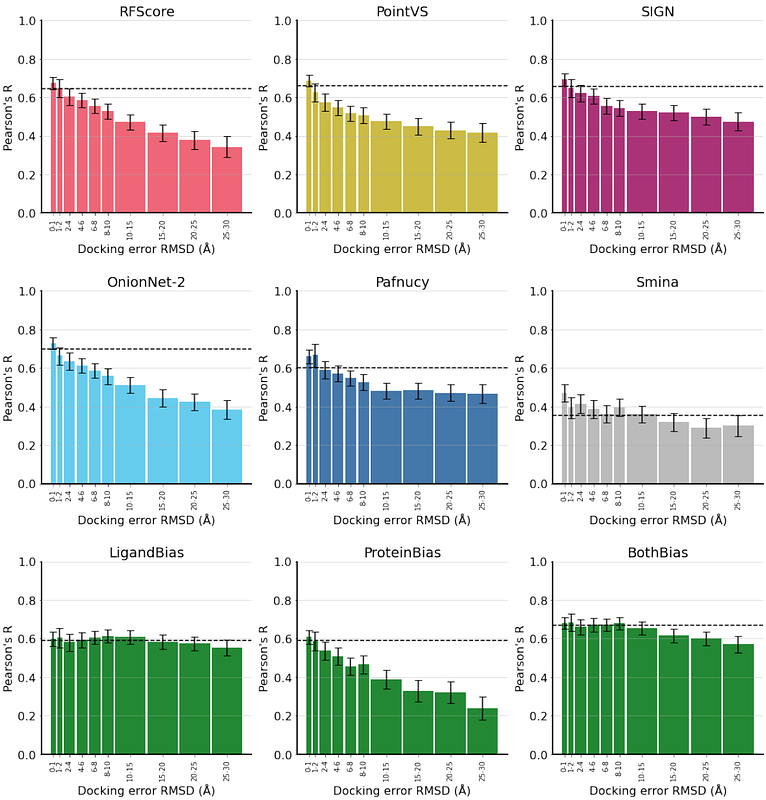

Abstract. Machine learning-based scoring functions (MLBSFs) have been found to perform inconsistently across benchmarks and to be prone to learning dataset bias. For the field to develop MLBSFs that learn a generalisable understanding of physics, a more rigorous understanding of how they perform is required. In this work, we compared the performance of a diverse set of popular MLBSFs (RFScore, SIGN, OnionNet-2, Pafnucy, and PointVS) on a range of benchmarks against our proposed baseline models, which can only learn dataset biases. We found that these baseline models were competitive in accuracy with the MLBSFs on almost all proposed benchmarks, indicating that the MLBSFs only learn dataset biases. Our tests and provided platform, ToolBoxSF, will enable researchers to robustly interrogate MLBSF performance and determine the effect of dataset biases on their predictions. Data and code are available at https://github.com/guydurant/toolboxsf.
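The comparison described in the abstract can be illustrated with a minimal sketch. It is not the ToolBoxSF API and does not reproduce the paper's baselines or data: the features are synthetic, the "ligand-only" and "interaction" feature groups are hypothetical stand-ins, and a plain least-squares model replaces the actual MLBSFs. The point is only the shape of the test: if a model restricted to bias-only inputs scores about as well as a model given all inputs, the benchmark score says little about learned protein-ligand physics.

```python
import numpy as np

def pearson_r(y_true, y_pred):
    """Pearson correlation between true and predicted affinities."""
    return float(np.corrcoef(y_true, y_pred)[0, 1])

def fit_linear(X, y):
    """Ordinary least squares with an intercept column appended."""
    A = np.column_stack([X, np.ones(len(X))])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return coef

def predict_linear(coef, X):
    A = np.column_stack([X, np.ones(len(X))])
    return A @ coef

# Synthetic data (assumption): the label is dominated by ligand-only
# features, with only a weak contribution from interaction features.
rng = np.random.default_rng(0)
n = 400
ligand_feats = rng.normal(size=(n, 3))       # hypothetical bias-only inputs
interaction_feats = rng.normal(size=(n, 3))  # hypothetical physics inputs
y = (ligand_feats @ np.array([1.0, 0.8, 0.5])
     + 0.2 * interaction_feats[:, 0]
     + rng.normal(scale=0.3, size=n))

train, test = slice(0, 300), slice(300, n)
full_feats = np.hstack([ligand_feats, interaction_feats])

# Baseline sees only the bias-carrying features; the "full" model sees all.
coef_baseline = fit_linear(ligand_feats[train], y[train])
coef_full = fit_linear(full_feats[train], y[train])

r_baseline = pearson_r(y[test], predict_linear(coef_baseline, ligand_feats[test]))
r_full = pearson_r(y[test], predict_linear(coef_full, full_feats[test]))

print(f"baseline (ligand-only) Pearson r: {r_baseline:.3f}")
print(f"full model Pearson r:             {r_full:.3f}")
```

On data constructed this way the two correlations come out close together, which is the pattern the abstract reports for real benchmarks; a large gap in favour of the full model would instead suggest that the interaction features carry usable signal.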