Scaling unlocks broader generation and deeper functional understanding of proteins

Scaling unlocks broader generation and deeper functional understanding of proteins

Bhatnagar, A.; Jain, S.; Beazer, J.; Curran, S. C.; Hoffnagle, A. M.; Ching, K.; Martyn, M.; Nayfach, S.; Ruffolo, J. A.; Madani, A.

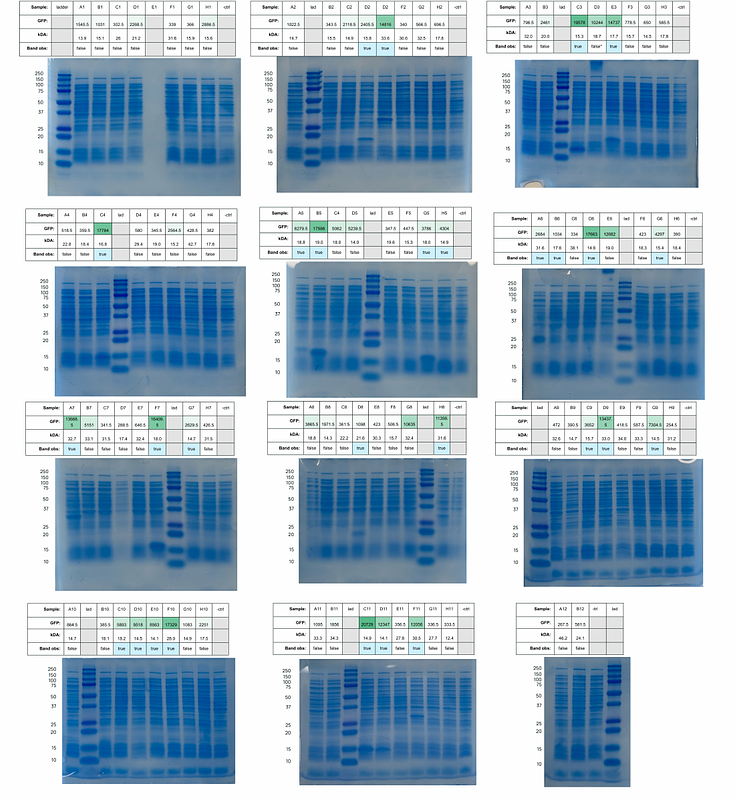

AbstractGenerative protein language models (PLMs) are powerful tools for designing proteins purpose-built to solve problems in medicine, agriculture, and industrial processes. Recent work has trained ever larger language models, but there has been little systematic study of the optimal training distributions and the influence of model scale on the sequences generated by PLMs. We introduce the ProGen3 family of sparse generative PLMs, and we develop compute-optimal scaling laws to scale up to a 46B-parameter model pre-trained on 1.5T amino acid tokens. ProGen3's pre-training data is sampled from an optimized data distribution over the Profluent Protein Atlas v1, a carefully curated dataset of 3.4B full-length proteins. We evaluate for the first time in the wet lab the influence of model scale on the sequences generated by PLMs, and we find that larger models generate viable proteins for a much wider diversity of protein families. Finally, we find both computationally and experimentally that larger models are more responsive to alignment with laboratory data, resulting in improved protein fitness prediction and sequence generation capabilities. These results indicate that larger PLMs like ProGen3-46B trained on larger, well-curated datasets are powerful foundation models that push the frontier of protein design.