ColAG: A Collaborative Air-Ground Framework for Perception-Limited UGVs' Navigation

Zhehan Li, Rui Mao, Nanhe Chen, Chao Xu, Fei Gao, Yanjun Cao

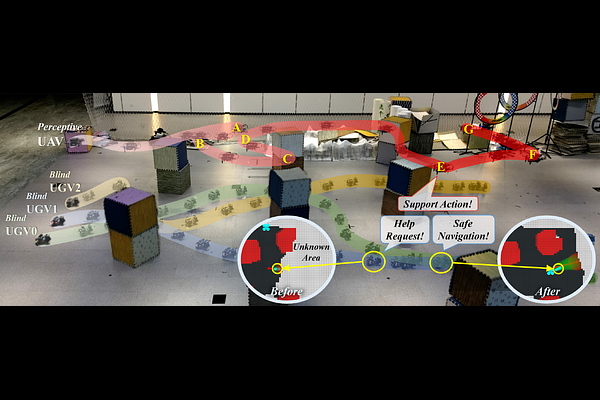

Abstract: Perception is necessary for autonomous navigation in an unknown area crowded with obstacles. A robot without any sensors that can sense the environment, i.e., a $\textit{blind}$ robot, can hardly navigate safely, and the problem becomes harder still for a group of such robots. However, equipping every robot with expensive perception or SLAM systems can be costly. In this paper, we propose a novel system named $\textbf{ColAG}$ that solves the problem of autonomous navigation for a group of $\textit{blind}$ UGVs by introducing cooperation with one UAV, which is the only robot in the group with full perception capabilities. The UAV uses SLAM for its own odometry and mapping, and shares this information with the UGVs via limited relative pose estimation. The UGVs plan their trajectories in the received map and predict possible failures caused by the uncertainty of their wheel odometry and by unknown risky areas. The UAV dynamically schedules waypoints to prevent the UGVs from colliding. This scheduling is formulated as a Vehicle Routing Problem with Time Windows, which optimizes the UAV's trajectories and minimizes the time the UGVs must wait to guarantee safety. We validate our system through extensive simulations with up to 7 UGVs and real-world experiments with 3 UGVs.
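To make the scheduling idea concrete, the following is a minimal sketch of a single-vehicle routing problem with soft time windows, solved by brute force. It is not the paper's formulation or solver. The function name `plan_uav_visits`, the waypoint tuple layout, the constant UAV speed, and the cost (total UGV waiting time, with finish time as a tie-breaker) are all assumptions made for illustration. Here `latest` stands for the hypothetical time after which a UGV would have to stop and wait for the UAV.

```python
from itertools import permutations
from math import hypot


def plan_uav_visits(start, waypoints, speed=1.0):
    """Brute-force a visiting order for one UAV (illustrative only).

    waypoints maps a UGV name to (x, y, earliest, latest). These fields
    are assumptions for this sketch, not the paper's notation.
    Returns (total_ugv_wait, finish_time, order).
    """
    best = None
    for order in permutations(waypoints):
        pos, t, wait = start, 0.0, 0.0
        for name in order:
            x, y, earliest, latest = waypoints[name]
            # Fly straight to the waypoint at constant speed.
            t += hypot(x - pos[0], y - pos[1]) / speed
            # Arriving early means the UAV hovers until the window opens.
            t = max(t, earliest)
            # Arriving late means the UGV has been waiting since `latest`.
            wait += max(0.0, t - latest)
            pos = (x, y)
        candidate = (wait, t, order)
        if best is None or candidate < best:
            best = candidate
    return best


# Hypothetical scenario: ugv_b is farther away but has the tighter deadline.
waypoints = {
    "ugv_a": (3.0, 4.0, 0.0, 30.0),
    "ugv_b": (-6.0, -8.0, 0.0, 10.0),
}
ugv_wait, finish_time, order = plan_uav_visits((0.0, 0.0), waypoints)
print(order, ugv_wait, finish_time)
```

In this toy scenario the deadline, not the distance, decides the order: visiting the nearer `ugv_a` first would leave `ugv_b` waiting. Enumerating permutations only scales to a handful of waypoints, so a practical system would need a dedicated VRPTW solver or heuristic.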