The Un-Kidnappable Robot: Acoustic Localization of Sneaking People

This paper is a preprint and has not been certified by peer review.

Mengyu Yang, Patrick Grady, Samarth Brahmbhatt, Arun Balajee Vasudevan, Charles C. Kemp, James Hays

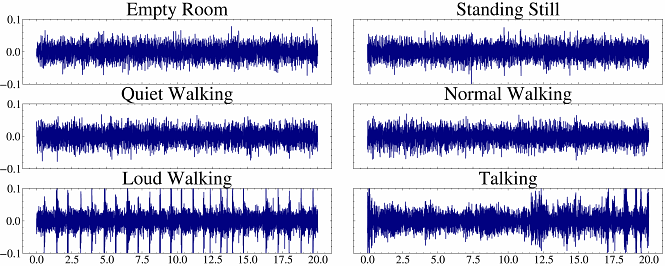

Abstract

How easy is it to sneak up on a robot? We examine whether we can detect people using only the incidental sounds they produce as they move, even when they try to be quiet. We collect a robotic dataset of high-quality 4-channel audio paired with 360-degree RGB data of people moving in different indoor settings. We train models that use only audio to predict whether a moving person is nearby and, if so, where they are. We implement our method on a robot, allowing it to track a single person moving quietly with only passive audio sensing. For demonstration videos, see our project page: https://sites.google.com/view/unkidnappable-robot