DEEP LEARNING-BASED HIGH-THROUGHPUT PHENOTYPING OF MAIZE (Zea mays L.) TASSELING FROM UAS IMAGERY ACROSS ENVIRONMENTS

Shepard, N. R.; DeSalvio, A. J.; Arik, M.; Adak, A.; Murray, S. C.; Varela, J. I.; de Leon, N.

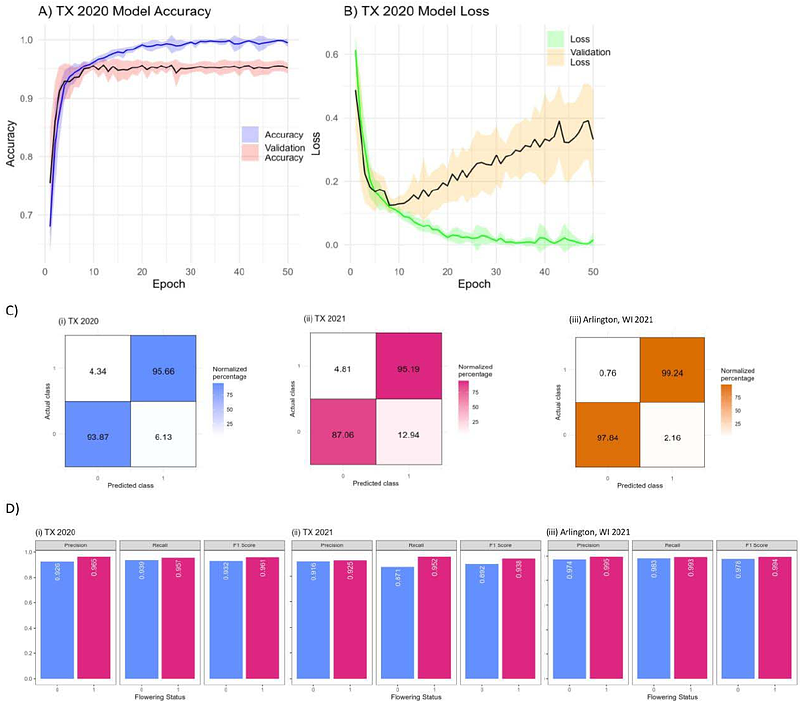

Abstract

Flowering time is a critical phenological trait in maize (Zea mays L.) breeding programs. Traditional assessment of flowering time relies on semi-subjective, labor-intensive manual observation, which limits the scale and efficiency of genetics and breeding improvement. Unoccupied aerial system (UAS, also known as UAVs or drones) technology coupled with convolutional neural networks (CNNs) presents a promising approach for high-throughput phenotyping of tasseling in maize. Most CNN image analysis pipelines, however, are more complicated than the simple tasks relevant to plant scientists require. Here, a methodology for extracting tasseling from RGB imagery using a CNN-based approach was applied to 220 hybrids and 30 test lines grown in eight diverse environments (Wisconsin and Texas, U.S.A.) and then validated on an unrelated set of hybrids. Overall accuracies of 0.946, 0.911, 0.985, and 0.988 were obtained for classifying maize images with or without tassels from College Station, TX in 2020; College Station, TX in 2021; Arlington, WI in 2021; and Madison, WI in 2021, respectively. By employing deep learning techniques, larger volumes of phenotypic data can be processed, enabling high-throughput phenotyping in breeding programs. Although large datasets are required to train CNN models, the proposed methodology prioritizes simplicity in computational architecture while maintaining effectiveness in identifying flowered maize across diverse genotypes and environments.
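
For readers less familiar with the metric, overall accuracy for a two-class problem (tassel versus no tassel) is the fraction of images whose predicted class matches the reference class. The following is a minimal sketch of that calculation, not the evaluation code used in this study; the function name `overall_accuracy` and the toy labels are assumptions introduced purely for illustration.

```python
from typing import Sequence


def overall_accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Return the fraction of images whose predicted class matches the reference."""
    if len(y_true) != len(y_pred):
        raise ValueError("label sequences must have the same length")
    if not y_true:
        raise ValueError("at least one image is required")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


# Toy labels invented for illustration only (1 = tassel, 0 = no tassel);
# these are NOT data from the study.
reference = [1, 1, 0, 0, 1, 0, 1, 0]
predicted = [1, 1, 0, 1, 1, 0, 1, 0]
toy_accuracy = overall_accuracy(reference, predicted)
print(f"overall accuracy: {toy_accuracy:.3f}")  # prints "overall accuracy: 0.875"
```

Overall accuracy weights both classes by their frequency, so it is most informative when images with and without tassels are reasonably balanced within an environment.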