Neural hierarchy for coding articulatory dynamics in speech imagery and production

Zhao, Z.; Wang, Z.; Liu, Y.; Qian, Y.; Yin, Y.; Gao, X.; Yuan, B.; Tong, S. X.; Tian, X.; Chen, G.; Li, Y.; Lu, J.; Wu, J.

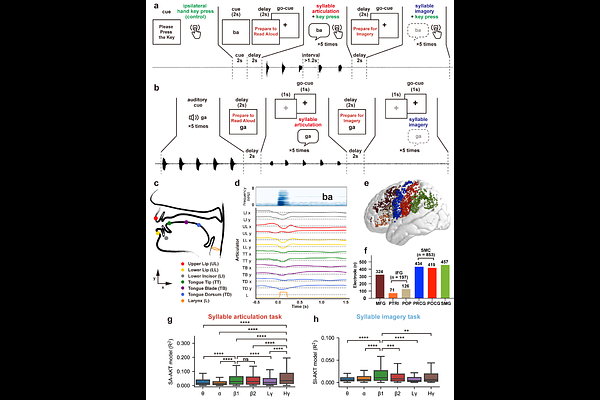

Abstract

Mental imagery is a hallmark of human cognition, yet the neural mechanisms underlying these internal states remain poorly understood. Speech imagery, the internal simulation of speech without overt articulation, has been proposed to share neural substrates partially with overt speech articulation. However, the precise features encoded by this neural architecture and its spatiotemporal dynamics remain controversial, which limits both our understanding of mental states and the development of reliable speech imagery decoders. Here, we used high-resolution electrocorticography (ECoG) recordings to investigate the shared and modality-specific cortical coding of articulatory kinematic trajectories (AKTs) during speech imagery and articulation. Using a linear model, we identified robust neural dynamics in frontoparietal cortex that encoded AKTs in both modalities. Shared neural populations across the middle premotor cortex, subcentral gyrus, and postcentral-supramarginal junction exhibited consistent spatiotemporal stability during integrative articulatory planning. In contrast, modality-specific populations for speech imagery and articulation were somatotopically interleaved along the primary sensorimotor cortex, revealing a hierarchical spatiotemporal organization distinct from that of the shared encoding regions. We further developed a generalized neural network to decode multi-population neural dynamics. The model achieved high syllable prediction accuracy for speech imagery (79% median accuracy), closely matching that for speech articulation (81%). It robustly extrapolated AKT decoding to untrained syllables within each modality and generalized across modalities within the shared populations. These findings uncover a somato-cognitive hierarchy linking high-level supramodal planning with modality-specific neural manifestation, paving the way for imagery-based brain-computer interfaces that directly decode thoughts, toward synthetic telepathy.
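The abstract does not specify the form of the linear model. One common way to relate kinematic trajectories to cortical activity is a time-lagged linear encoding model fit with ridge regression, scored by the held-out correlation per electrode. The sketch below assumes that setup and is not the authors' pipeline. It uses synthetic white-noise "AKTs" and simulated electrodes instead of real ECoG data. The helpers `lagged_design`, `fit_ridge`, `pearson_per_column` and `simulate` are hypothetical, and so are the lag range and the split of electrodes into shared and modality-specific groups. The model is trained on simulated "articulation" data and then tested on simulated "imagery" data, which illustrates how cross-modal generalization would separate shared from modality-specific electrodes.

```python
import numpy as np

def lagged_design(akt, lags):
    """Stack time-shifted copies of an AKT matrix (time x kinematic features).
    For lag k >= 0, row t holds kinematics at t + k, so neural activity at t
    is modeled from upcoming movement (zero-padded at the edges)."""
    n_t, n_f = akt.shape
    X = np.zeros((n_t, n_f * len(lags)))
    for i, k in enumerate(lags):
        shifted = np.zeros_like(akt)
        if k >= 0:
            shifted[: n_t - k] = akt[k:]
        else:
            shifted[-k:] = akt[: n_t + k]
        X[:, i * n_f:(i + 1) * n_f] = shifted
    return X

def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge solution W = (X^T X + alpha I)^-1 X^T Y."""
    return np.linalg.solve(X.T @ X + alpha * np.eye(X.shape[1]), X.T @ Y)

def pearson_per_column(Y_true, Y_pred):
    """Pearson correlation between true and predicted activity, per electrode."""
    yt = Y_true - Y_true.mean(axis=0)
    yp = Y_pred - Y_pred.mean(axis=0)
    return (yt * yp).sum(axis=0) / np.sqrt((yt ** 2).sum(axis=0) * (yp ** 2).sum(axis=0))

def simulate(rng, W, n_t, lags, noise_sd=1.0):
    """Hypothetical data: white-noise kinematics mapped through weights W plus noise.
    Real AKTs are smooth and correlated; this is only for illustration."""
    akt = rng.standard_normal((n_t, W.shape[0] // len(lags)))
    X = lagged_design(akt, lags)
    Y = X @ W + noise_sd * rng.standard_normal((n_t, W.shape[1]))
    return X, Y

rng = np.random.default_rng(0)
lags = list(range(5))                      # assumed lag range, in samples
n_feat = 4 * len(lags)                     # 4 hypothetical kinematic features
W_shared = rng.standard_normal((n_feat, 4))
# Electrodes 0-3 share weights across modalities; 4-7 are modality-specific.
W_art = np.hstack([W_shared, rng.standard_normal((n_feat, 4))])
W_img = np.hstack([W_shared, rng.standard_normal((n_feat, 4))])

X_tr, Y_tr = simulate(rng, W_art, 2000, lags)    # "articulation" training set
X_te, Y_te = simulate(rng, W_art, 1000, lags)    # held-out "articulation"
X_img, Y_img = simulate(rng, W_img, 1000, lags)  # "imagery" test set

W_hat = fit_ridge(X_tr, Y_tr)
r_within = pearson_per_column(Y_te, X_te @ W_hat)
r_cross = pearson_per_column(Y_img, X_img @ W_hat)
print("within-modality r:", np.round(r_within, 2))
print("cross-modal r:    ", np.round(r_cross, 2))
```

In this toy setting, the model trained on articulation should predict held-out articulation well on every electrode. On imagery data it should predict well only on the electrodes whose weights are shared. In real recordings, kinematics during imagery are not directly observed and would have to be inferred or borrowed from the cued syllable, which is a further assumption not addressed here.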