By: Stephanie M. Lukin, Kimberly A. Pollard, Claire Bonial, Taylor Hudson, Ron Artstein, Clare Voss, David Traum

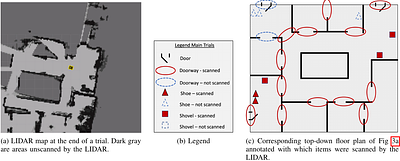

Human-guided robotic exploration is a useful approach to gathering information at remote locations, especially those that might be too risky, inhospitable, or inaccessible for humans. Maintaining common ground between the remotely located partners is a challenge, one that can be facilitated by multi-modal communication. In this paper, we explore how participants utilized multiple modalities to investigate a remote location with the help of a robotic partner. Participants issued spoken natural language instructions and received from the robot: text-based feedback, continuous 2D LIDAR mapping, and upon-request static photographs. We noticed that participants adopted different strategies in their use of the modalities, and hypothesize that these differences may be correlated with success at several exploration sub-tasks. We found that requesting photos may have improved the identification and counting of some key entities (doorways in particular) and that this strategy did not reduce the amount of area explored overall. Future work with larger samples may reveal the effects of more nuanced photo and dialogue strategies, which can inform the training of robotic agents. Additionally, we announce the release of our unique multi-modal corpus of human-robot communication in an exploration context: SCOUT, the Situated Corpus on Understanding Transactions.

By: Peiling Jiang, Li Feng, Fuling Sun, Parakrant Sarkar, Haijun Xia, Can Liu

Existing text selection techniques on touchscreens focus on improving control over caret movement. Coarse-grained text selection at the word and phrase levels has not received much support beyond word-snapping and entity recognition. We introduce 1D-Touch, a novel text selection method that complements caret-based sub-word selection by facilitating the selection of semantic units of words and above. This method employs a simple vertical slide gesture to expand and contract a selection area from a word. The expansion can be by words or by semantic chunks ranging from sub-phrases to sentences. This technique shifts the concept of text selection from defining a range by locating the first and last words towards a dynamic process of expanding and contracting a textual semantic entity. To understand the effects of our approach, we prototyped and tested two variants: WordTouch, which offers a straightforward word-by-word expansion, and ChunkTouch, which leverages NLP to chunk text into syntactic units, allowing the selection to grow by semantically meaningful units in response to the sliding gesture. Our evaluation, focused on the coarse-grained selection tasks handled by 1D-Touch, shows a 20% improvement over the default word-snapping selection method on Android.
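
The word-by-word expansion described for WordTouch can be illustrated with a minimal sketch. The abstract does not say how the selection grows around the starting word, so the symmetric growth used here (one word on each side per gesture step) is an assumption, and the names `expand_selection` and `selected_text` are hypothetical, not taken from the authors' prototype.

```python
def expand_selection(words, anchor, steps):
    """Return the inclusive (start, end) word indices of the selection.

    Assumption: each gesture step grows the selection by one word on
    both sides of the anchor word, clamped to the bounds of the text.
    """
    if not 0 <= anchor < len(words):
        raise IndexError("anchor word index out of range")
    steps = max(steps, 0)  # a negative slide cannot shrink below one word
    start = max(anchor - steps, 0)
    end = min(anchor + steps, len(words) - 1)
    return start, end


def selected_text(words, anchor, steps):
    """Join the currently selected words back into a string."""
    start, end = expand_selection(words, anchor, steps)
    return " ".join(words[start:end + 1])


words = "We introduce a novel text selection method".split()
print(selected_text(words, 3, 0))  # novel
print(selected_text(words, 3, 1))  # a novel text
```

A chunk-based variant in the spirit of ChunkTouch would replace the word list with a list of syntactic units produced by a parser, leaving the expansion logic unchanged.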

By: Marika Malaspina, Jessica Amianto Barbato, Marco Cremaschi, Francesca Gasparini, Alessandra Grossi, Aurora Saibene

In the video game industry, great importance is given to the experience that the user has while playing a game. In particular, this experience benefits from the players' perceived sense of being in the game, or immersion. The level of user immersion depends not only on the game's content but also on how the game is displayed, and thus on its User Interface (UI) and Heads-Up Display (HUD). Another factor influencing immersiveness that has been found in the literature is the player's expertise: the more experience the user has with a specific game, the less on-screen information they need to be immersed in the game. Players' level of immersion can be assessed both through questionnaires on their perceived experience and through their behavioural and physiological responses while playing the target game. Therefore, in this paper, we propose an experimental protocol to assess the immersion of gamers while playing a third-person shooter (Fortnite) using UIs with a standard, a diegetic, and a proposed HUD. A subjective evaluation of immersion will be obtained through the Immersive Experience Questionnaire (IEQ), while objective indicators will be provided by analyses of face tracking, behaviour, and physiological responses. The ultimate goal of this study is to define guidelines for video game UI development that can enhance players' immersion.

By: Martin Johannes Dechant, Olga Lukashova-Sanz, Siegfried Wahl

Trust is essential for our interactions with others but also with artificial intelligence (AI) based systems. To understand whether a user trusts an AI, researchers need reliable measurement tools. However, currently discussed markers mostly rely on expensive and invasive sensors, like electroencephalograms, which may cause discomfort. The analysis of gaze data has been suggested as a convenient tool for trust assessment. However, the relationship between trust and several aspects of gaze behaviour is not yet fully understood. To provide more insight into this relationship, we propose an exploratory study in virtual reality where participants have to perform a sorting task together with a simulated AI in a simulated robotic arm embedded in a game. We discuss the potential benefits of this approach and outline our study design in this submission.

By: Niki Maria Foteinopoulou, Ioannis Patras

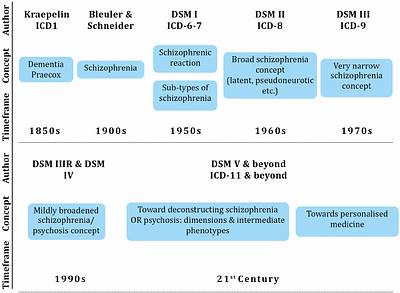

Schizophrenia is a severe yet treatable mental disorder that is diagnosed using a multitude of primary and secondary symptoms. Diagnosis and treatment for each individual depend on the severity of the symptoms; therefore, there is a need for accurate, personalised assessments. However, the process can be both time-consuming and subjective; hence, there is a motivation to explore automated methods that can offer consistent diagnosis and precise symptom assessments, thereby complementing the work of healthcare practitioners. Machine Learning has demonstrated impressive capabilities across numerous domains, including medicine; the use of Machine Learning in patient assessment holds great promise for healthcare professionals and patients alike, as it can lead to more consistent and accurate symptom estimation. This survey aims to review methodologies that utilise Machine Learning for diagnosis and assessment of schizophrenia. In contrast to previous reviews that primarily focused on binary classification, this work recognises the complexity of the condition and instead offers an overview of Machine Learning methods designed for fine-grained symptom estimation. We cover multiple modalities, namely Medical Imaging, Electroencephalograms and Audio-Visual, as the symptoms of the illness can manifest themselves both in a patient's pathology and behaviour. Finally, we analyse the datasets and methodologies used in the studies and identify trends, gaps, and opportunities for future research.

By: Jiayi Xu, Shoichi Hasegawa, Kiyoshi Kiyokawa, Naoto Ienaga, Yoshihiro Kuroda

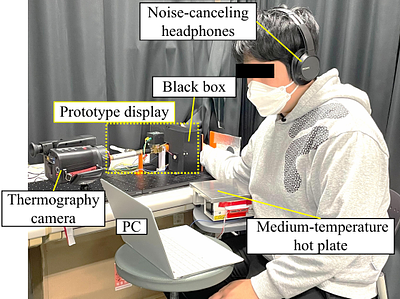

Thermal sensation is crucial to enhancing our comprehension of the world and our ability to interact with it. Therefore, the development of thermal sensation presentation technologies holds significant potential, providing a novel method of interaction. Traditional technologies often leave residual heat in the system or the skin, affecting subsequent presentations. Our study focuses on presenting thermal sensations with low residual heat, especially cold sensations. To mitigate the impact of residual heat in the presentation system, we opted for a non-contact method, and to address the influence of residual heat on the skin, we present thermal sensations without significantly altering skin temperature. Specifically, we integrated two highly responsive and independent heat transfer mechanisms: convection via cold air and radiation via visible light, providing non-contact thermal stimuli. By rapidly alternating between perceptible decreases and imperceptible increases in temperature on the same skin area, we maintained near-constant skin temperature while presenting continuous cold sensations. In our experiments involving 15 participants, we observed that when the cooling rate was -0.2 to -0.24 degrees Celsius per second and the cooling time ratio was 30 to 50%, more than 86.67% of the participants perceived only persistent cold without any warmth.
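
The alternation between cooling and warming phases can be made concrete with a small arithmetic sketch. It assumes an idealised model, not stated in the abstract, in which skin temperature changes linearly within each phase; under that assumption, the net change over one cycle is zero when the warming rate balances the cooling rate weighted by the cooling time ratio. The function name `balancing_warming_rate` is hypothetical.

```python
def balancing_warming_rate(cooling_rate, cooling_ratio):
    """Warming rate (degrees Celsius per second) giving zero net change per cycle.

    Assumes linear temperature change in each phase, so that
    cooling_rate * d + warming_rate * (1 - d) = 0, where d is the
    fraction of each cycle spent cooling.
    """
    if not 0 < cooling_ratio < 1:
        raise ValueError("cooling_ratio must lie strictly between 0 and 1")
    return -cooling_rate * cooling_ratio / (1 - cooling_ratio)


# Cooling rates and cooling time ratios taken from the range reported above.
for rate in (-0.2, -0.24):
    for ratio in (0.3, 0.5):
        warming = balancing_warming_rate(rate, ratio)
        print(f"cooling {rate} C/s for {ratio:.0%} of the cycle "
              f"-> warming {warming:.3f} C/s for the remainder")
```

Under this simplified model, a shorter cooling phase lets the warming phase run at a lower rate, which is consistent with the goal of keeping the temperature increases imperceptible.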

By: Hao Wang, Qingxuan Wang, Yue Li, Changqing Wang, Chenhui Chu, Rui Wang

The use of visually-rich documents (VRDs) in various fields has created a demand for Document AI models that can read and comprehend documents like humans, which requires the overcoming of technical, linguistic, and cognitive barriers. Unfortunately, the lack of appropriate datasets has significantly hindered advancements in the field. To address this issue, we introduce DocTrack, a VRD dataset really aligned with human eye-movement information using eye-tracking technology. This dataset can be used to investigate the challenges mentioned above. Additionally, we explore the impact of human reading order on document understanding tasks and examine what would happen if a machine reads in the same order as a human. Our results suggest that although Document AI models have made significant progress, they still have a long way to go before they can read VRDs as accurately, continuously, and flexibly as humans do. These findings have potential implications for future research and development of Document AI models. The data is available at https://github.com/hint-lab/doctrack.

By: Zixuan Guo, Wenge Xu, Jialin Zhang, Hongyu Wang, Cheng-Hung Lo, Hai-Ning Liang

Familiarity with audiences plays a significant role in shaping individual performance and experience across various activities in everyday life. This study delves into the impact of familiarity with non-playable character (NPC) audiences on player performance and experience in virtual reality (VR) exergames. By manipulating NPC appearance (face and body shape) and voice familiarity, we explored their effect on game performance, experience, and exertion. The findings reveal that familiar NPC audiences have a positive impact on performance, creating a more enjoyable gaming experience and leading players to perceive less exertion. Moreover, individuals with higher levels of self-consciousness exhibit heightened sensitivity to familiarity with NPC audiences. Our results shed light on the role of familiar NPC audiences in enhancing player experiences and provide insights for designing more engaging and personalized VR exergame environments.

By: Andrea Tocchetti, Silvia Maria Talenti, Marco Brambilla

During the Covid-19 pandemic, research communities focused on collecting and understanding people's behaviours and feelings to study and tackle the pandemic's indirect effects. Although its consequences are slowly starting to fade away, this interest is still alive. In this article, we propose a hybrid, gamified, story-driven data collection approach to spark self-empathy, hence resurfacing people's past feelings. The game is designed to include a physical board, decks of cards, and a digital application. As the player plays through the game, they customize and escape from their lockdown room by completing statements and answering a series of questions that define their story. The decoration of the lockdown room and the storytelling-driven approach are targeted at sparking people's emotions and self-empathy towards their past selves. Ultimately, the proposed approach proved effective in sparking and collecting feelings, although a few improvements are still necessary.

By: Ekene Attoh, Beat Signer

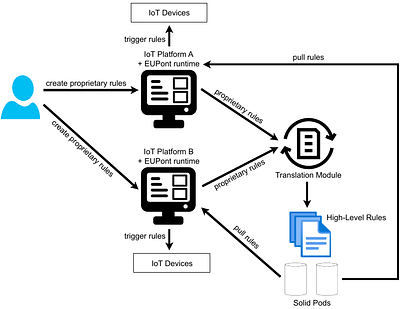

With the rise of popular task automation or IoT platforms such as 'If This Then That (IFTTT)', users can define rules to enable interactions between smart devices in their environment and thereby improve their daily lives. However, the rules authored via these platforms are usually tied to the platforms and sometimes even to the specific devices for which they have been defined. Therefore, when a user wishes to move to a different environment controlled by a different platform and/or devices, they need to recreate their rules for the new environment. The rise in the number of smart devices further adds to the complexity of rule authoring since users will have to navigate an ever-changing landscape of IoT devices. In order to address this problem, we need human-computer interaction that works across the boundaries of specific IoT platforms and devices. A step towards this human-computer interaction across platforms and devices is the introduction of a high-level semantic model for end-user IoT development, enabling users to create rules at a higher level of abstraction. However, many users who have already become used to the rule representation in their favourite tool might be unwilling to learn and adapt to a new representation. We present a method for translating proprietary rules to a high-level semantic model by using natural language processing techniques. Our translation enables users to work with their familiar rule representation language and tool, and at the same time apply their rules across different IoT platforms and devices.
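
The idea of lifting a platform-specific rule into a platform-neutral representation can be illustrated with a toy sketch. It is not the authors' method: the abstract mentions natural language processing techniques without detail, so a simple regular expression stands in here, and the `SemanticRule` class and `parse_rule` function are hypothetical names introduced only for illustration.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class SemanticRule:
    """Hypothetical platform-neutral rule: a trigger and an action."""
    trigger: str
    action: str


# Matches English rules of the form "If <trigger>, then <action>."
_RULE_PATTERN = re.compile(
    r"^\s*if\s+(?P<trigger>.+?)\s*,?\s*\bthen\b\s+(?P<action>.+?)\s*\.?\s*$",
    re.IGNORECASE,
)


def parse_rule(text: str) -> Optional[SemanticRule]:
    """Split an if-then rule description into trigger and action.

    Returns None when the text does not follow the if-then pattern.
    """
    match = _RULE_PATTERN.match(text)
    if match is None:
        return None
    return SemanticRule(match.group("trigger"), match.group("action"))


rule = parse_rule("If the front door opens, then turn on the hallway light.")
print(rule)
```

A real translator would additionally need to map the extracted trigger and action phrases onto device-independent concepts, which is where the natural language processing described above would come in.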