Initialization Bias of Fourier Neural Operator: Revisiting the Edge of Chaos

Takeshi Koshizuka, Masahiro Fujisawa, Yusuke Tanaka, Issei Sato

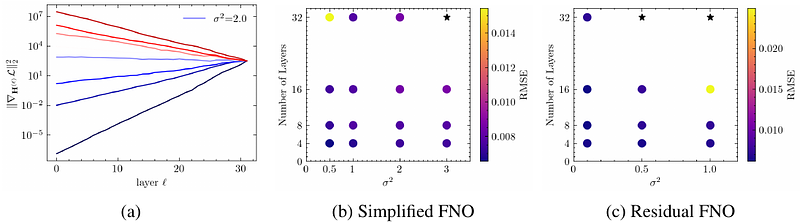

Abstract

This paper investigates the initialization bias of the Fourier neural operator (FNO). We establish a mean-field theory for FNO and analyze the behavior of the random FNO from an "edge of chaos" perspective. We find that forward and backward propagation exhibit characteristics unique to FNO, induced by mode truncation, while also showing similarities to those of densely connected networks. Building on this observation, we propose an FNO version of the He initialization scheme to mitigate the negative initialization bias that leads to training instability. Experimental results demonstrate the effectiveness of our initialization scheme, which enables stable training of a 32-layer FNO without additional techniques and without significant performance degradation.