Hebbian Continual Representation Learning

Paweł Morawiecki, Andrii Krutsylo, Maciej Wołczyk, Marek Śmieja

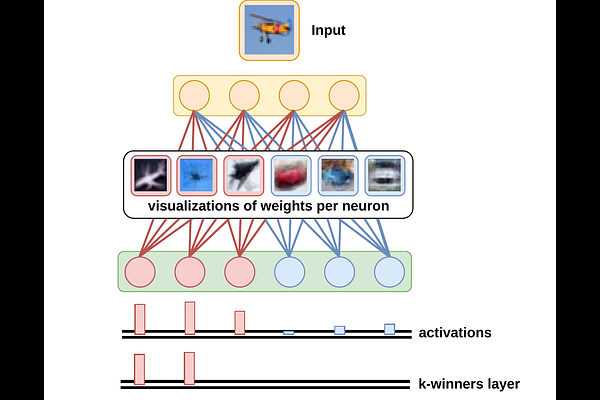

Abstract

Continual learning aims to bring machine learning into a more realistic scenario, where tasks are learned sequentially and the i.i.d. assumption does not hold. Although this setting is natural for biological systems, it proves very difficult for machine learning models such as artificial neural networks. To reduce this performance gap, we investigate whether biologically inspired Hebbian learning is useful for tackling continual learning challenges. In particular, we highlight a realistic and often overlooked unsupervised setting, where the learner has to build representations without any supervision. By combining sparse neural networks with the Hebbian learning principle, we build a simple yet effective alternative (HebbCL) to typical neural network models trained via gradient descent. Due to Hebbian learning, the network has easily interpretable weights, which might be essential in critical applications such as security or healthcare. We demonstrate the efficacy of HebbCL in an unsupervised learning setting applied to the MNIST and Omniglot datasets. We also adapt the algorithm to the supervised scenario and obtain promising results in class-incremental learning.