PocketNet: A Smaller Neural Network for Medical Image Analysis

Adrian Celaya, Jonas A. Actor, Rajarajeswari Muthusivarajan, Evan Gates, Caroline Chung, Dawid Schellingerhout, Beatrice Riviere, David Fuentes

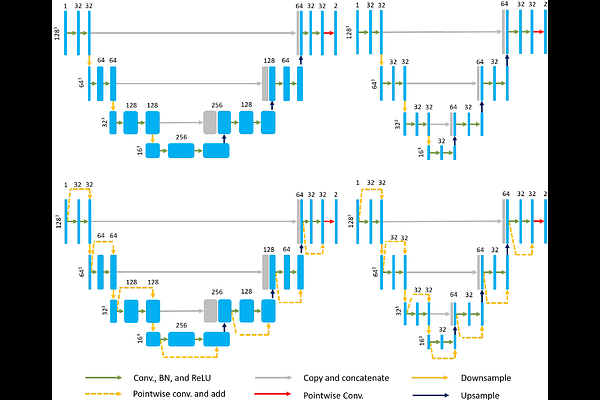

Abstract

Medical imaging deep learning models are often large and complex, requiring specialized hardware to train and evaluate. To address this, we propose the PocketNet paradigm, which reduces the size of deep learning models by throttling the growth of the number of channels in convolutional neural networks. We demonstrate that, for a range of segmentation and classification tasks, PocketNet architectures produce results comparable to those of conventional neural networks while reducing the number of parameters by multiple orders of magnitude, using up to 90% less GPU memory, and speeding up training times by up to 40%, thereby allowing such models to be trained and deployed in resource-constrained settings.
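
To illustrate why limiting channel growth shrinks a model, the following minimal sketch counts the parameters of a toy convolutional encoder under two channel schedules: one that doubles the channel count at each resolution level and one that holds it constant. It is not the authors' implementation. Treating "throttling" as a constant channel count is an assumption, as are the helper names `conv_params` and `encoder_params`, the 3x3x3 kernels, the two convolutions per level, the 32 base channels, and the five levels.

```python
def conv_params(c_in, c_out, kernel=3, dims=3):
    """Weights plus biases of one convolution layer (hypothetical helper)."""
    return c_in * c_out * kernel ** dims + c_out


def encoder_params(base_channels, levels, growth):
    """Parameter count of a toy encoder with two convolutions per level.

    growth=2 doubles the channel count at each level;
    growth=1 holds it constant (our assumed reading of "throttling").
    """
    total = 0
    c_in = 1  # assumption: single-channel input image
    for level in range(levels):
        c_out = base_channels * growth ** level
        # First convolution changes the channel count, second keeps it.
        total += conv_params(c_in, c_out) + conv_params(c_out, c_out)
        c_in = c_out
    return total


# Illustrative settings only; these values are not taken from the paper.
doubling = encoder_params(base_channels=32, levels=5, growth=2)
constant = encoder_params(base_channels=32, levels=5, growth=1)

print(f"doubling channels: {doubling:,} parameters")
print(f"constant channels: {constant:,} parameters")
print(f"reduction factor:  {doubling / constant:.1f}x")
```

Because the parameter count of a convolution scales with the product of its input and output channels, doubling the channels at every level roughly quadruples the cost of each deeper level, so the deepest layers dominate the total. Holding the channel count fixed removes that geometric growth. The exact savings depend on the architecture and are not predicted by this toy count.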