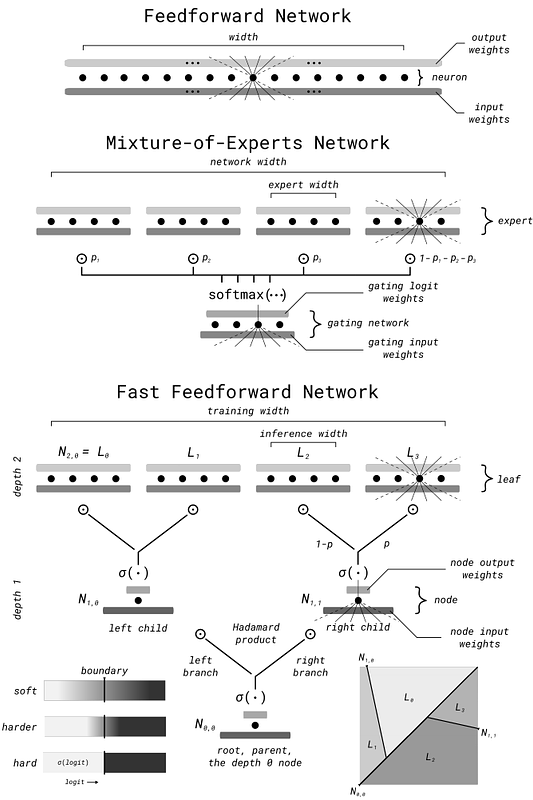

Fast Feedforward Networks

Peter Belcak, Roger Wattenhofer

Abstract

We break the linear link between the size of a layer and its inference cost by introducing the fast feedforward (FFF) architecture, a logarithmic-time alternative to feedforward networks. We show that FFFs give comparable performance to feedforward networks at an exponential fraction of their inference cost, are quicker to deliver performance than mixture-of-experts networks, and can readily take the place of either in transformers. Pushing FFFs to the absolute limit, we train a vision transformer to perform single-neuron inferences at the cost of only a 5.8% decrease in performance against the full-width variant. Our implementation is available as a Python package; just use "pip install fastfeedforward".
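To give a feel for what a logarithmic-time layer could look like, the following is a minimal sketch and not the interface of the `fastfeedforward` package. It assumes a balanced binary tree of decision neurons with hard left/right routing and a small feedforward leaf at the end of each path; the abstract does not spell out these details. The class `TreeRoutedLayer`, its parameters, and the random weights are all hypothetical, and training is not covered.

```python
import random


def dot(w, x):
    """Plain dot product of two equal-length lists."""
    return sum(wi * xi for wi, xi in zip(w, x))


class TreeRoutedLayer:
    """Hypothetical toy layer: a binary tree of decision neurons over small leaves.

    Assumption: inference follows a single root-to-leaf path, so only
    `depth` decision neurons and one leaf are evaluated per input.
    """

    def __init__(self, in_dim, leaf_width, out_dim, depth, seed=0):
        rng = random.Random(seed)

        def vec(n):
            return [rng.gauss(0.0, 1.0) for _ in range(n)]

        self.depth = depth
        self.leaf_width = leaf_width
        # 2**depth - 1 decision neurons, stored in heap order (root at index 0).
        self.nodes = [vec(in_dim) for _ in range(2 ** depth - 1)]
        # 2**depth leaves, each a tiny feedforward block with leaf_width neurons.
        self.leaves_in = [[vec(in_dim) for _ in range(leaf_width)]
                          for _ in range(2 ** depth)]
        self.leaves_out = [[vec(leaf_width) for _ in range(out_dim)]
                           for _ in range(2 ** depth)]

    @property
    def total_neurons(self):
        """Neurons held by the layer: all decision neurons plus all leaf neurons."""
        return len(self.nodes) + len(self.leaves_in) * self.leaf_width

    def forward(self, x):
        """Return (output, number of neurons evaluated for this input)."""
        index = 0
        for _ in range(self.depth):
            # Hard routing: the sign of the decision neuron picks the child.
            go_right = dot(self.nodes[index], x) > 0.0
            index = 2 * index + (2 if go_right else 1)
        leaf = index - len(self.nodes)  # convert heap index to leaf number
        hidden = [max(0.0, dot(w, x)) for w in self.leaves_in[leaf]]  # ReLU
        y = [dot(w, hidden) for w in self.leaves_out[leaf]]
        return y, self.depth + self.leaf_width


layer = TreeRoutedLayer(in_dim=8, leaf_width=1, out_dim=4, depth=5)
x = [0.5, -1.0, 0.25, 2.0, -0.75, 1.5, 0.0, -0.5]
y, evaluated = layer.forward(x)
print(evaluated, "of", layer.total_neurons, "neurons evaluated")
```

With depth 5 and single-neuron leaves, this toy layer holds 63 neurons but evaluates only 6 per input (5 decisions plus 1 leaf neuron). Each extra level of depth roughly doubles the neuron count while adding one neuron to the per-input cost, which is the kind of scaling the logarithmic-time claim refers to.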