On the Last-iterate Convergence in Time-varying Zero-sum Games: Extra Gradient Succeeds where Optimism Fails

Yi Feng, Hu Fu, Qun Hu, Ping Li, Ioannis Panageas, Bo Peng, Xiao Wang

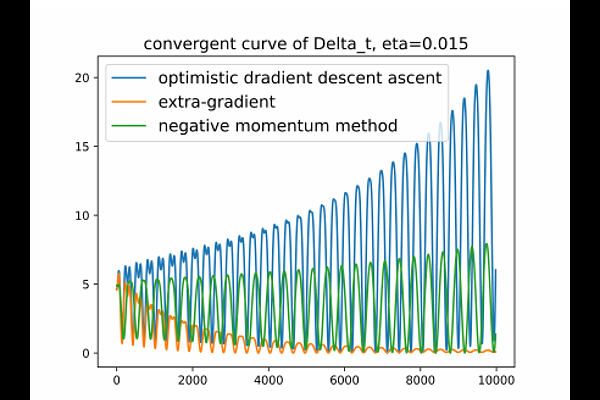

Abstract. Last-iterate convergence has been studied extensively in two-player zero-sum games, from bilinear and convex-concave games up to settings that satisfy the MVI condition. Typical methods that exhibit last-iterate convergence in these games include extra-gradient (EG) and optimistic gradient descent ascent (OGDA). However, all established last-iterate convergence results hold only in the restrictive setting where the underlying repeated game does not change over time. Recently, a line of research has focused on regret analysis of OGDA in time-varying games, i.e., games whose payoffs evolve with time; the last-iterate behavior of OGDA and EG in time-varying environments nevertheless remains unclear. In this paper, we study the last-iterate behavior of various algorithms in two types of unconstrained, time-varying, bilinear zero-sum games: periodic games and convergent perturbed games. These models extend the usual repeated-game formulation and incorporate external environmental factors, such as seasonal effects on species competition and vanishing external noise. In periodic games, we prove that EG converges while OGDA and the momentum method diverge. This is quite surprising: to the best of our knowledge, it is the first result indicating that EG and OGDA have qualitatively different last-iterate behaviors. In convergent perturbed games, we prove that all these algorithms converge as long as the game itself stabilizes at a rate faster than $1/t$.
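To make the two update rules concrete, the following is a minimal sketch of EG and OGDA on a scalar, unconstrained, periodic bilinear game $\min_x \max_y a_t x y$. Everything specific here is an assumption made for illustration: the payoff sequence in `payoff`, the step size, the starting point, the initialization of the "previous gradient" in OGDA, and the convention that OGDA at round $t$ combines the gradient of the current game with the gradient observed in the previous round. This toy instance is not the construction analyzed in the paper and is not expected to reproduce its divergence result for OGDA.

```python
import math


def payoff(t):
    """Hypothetical periodic payoff coefficient a_t (period 2), for illustration only."""
    values = [1.0, -0.5]
    return values[t % len(values)]


def extra_gradient(x, y, eta, steps, payoff):
    """Run EG on min_x max_y a_t*x*y and return the norm of (x, y) after each round."""
    norms = [math.hypot(x, y)]
    for t in range(steps):
        a = payoff(t)
        # Extrapolation step: a plain gradient descent-ascent step from (x, y).
        x_half = x - eta * a * y
        y_half = y + eta * a * x
        # Update step: move from (x, y) using gradients taken at the extrapolated point.
        x, y = x - eta * a * y_half, y + eta * a * x_half
        norms.append(math.hypot(x, y))
    return norms


def optimistic_gda(x, y, eta, steps, payoff):
    """Run OGDA on the same game and return the norm of (x, y) after each round."""
    norms = [math.hypot(x, y)]
    # Assumption: the "previous" gradient is initialized to the gradient at the
    # starting point, so the first round reduces to a plain descent-ascent step.
    gx_prev, gy_prev = payoff(0) * y, payoff(0) * x
    for t in range(steps):
        a = payoff(t)
        gx, gy = a * y, a * x  # current gradients w.r.t. x and y
        # Optimistic step: twice the current gradient minus the previous one.
        x, y = x - 2 * eta * gx + eta * gx_prev, y + 2 * eta * gy - eta * gy_prev
        gx_prev, gy_prev = gx, gy
        norms.append(math.hypot(x, y))
    return norms


if __name__ == "__main__":
    eg = extra_gradient(1.0, 1.0, eta=0.1, steps=200, payoff=payoff)
    ogda = optimistic_gda(1.0, 1.0, eta=0.1, steps=200, payoff=payoff)
    print("EG   final distance to (0, 0):", eg[-1])
    print("OGDA final distance to (0, 0):", ogda[-1])
```

In this scalar setting, one EG round scales the norm of $(x, y)$ by exactly $\sqrt{1 - \eta^2 a_t^2 + \eta^4 a_t^4}$, which is below 1 whenever $0 < |\eta a_t| < 1$, so the EG iterates shrink toward the equilibrium $(0, 0)$ in every round regardless of how $a_t$ varies. No such per-round guarantee holds for the OGDA recursion, since it depends on the previous round's gradient.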