Principled Approaches for Learning to Defer with Multiple Experts

Principled Approaches for Learning to Defer with Multiple Experts

Anqi Mao, Mehryar Mohri, Yutao Zhong

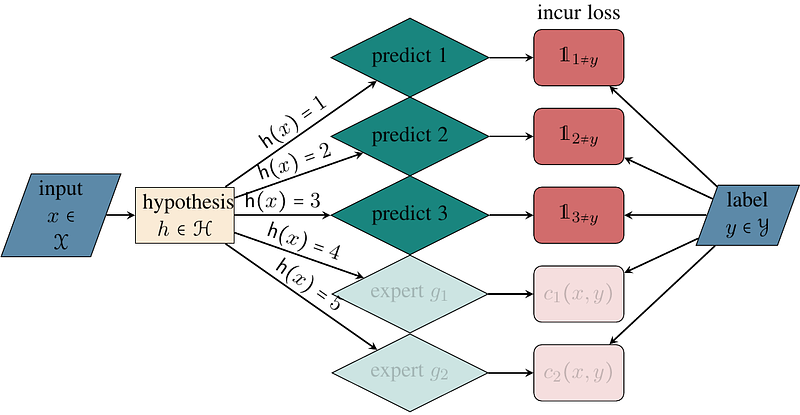

AbstractWe present a study of surrogate losses and algorithms for the general problem of learning to defer with multiple experts. We first introduce a new family of surrogate losses specifically tailored for the multiple-expert setting, where the prediction and deferral functions are learned simultaneously. We then prove that these surrogate losses benefit from strong $H$-consistency bounds. We illustrate the application of our analysis through several examples of practical surrogate losses, for which we give explicit guarantees. These loss functions readily lead to the design of new learning to defer algorithms based on their minimization. While the main focus of this work is a theoretical analysis, we also report the results of several experiments on SVHN and CIFAR-10 datasets.