Learning Balanced Field Summaries of the Large-Scale Structure with the Neural Field Scattering Transform

Matthew Craigie, Yuan-Sen Ting, Rossana Ruggeri, Tamara M. Davis

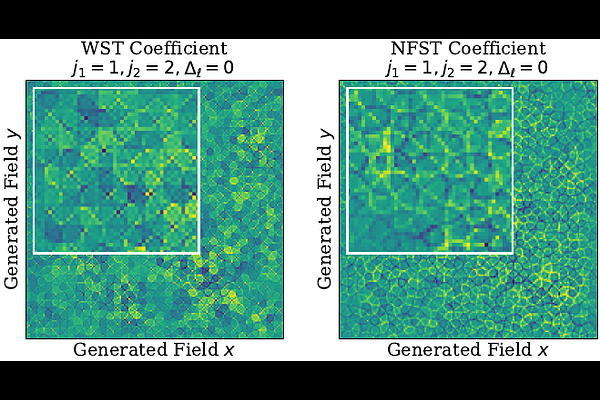

Abstract

We present a cosmological analysis of weak lensing convergence maps using the Neural Field Scattering Transform (NFST) to constrain cosmological parameters. The NFST extends the Wavelet Scattering Transform (WST) by incorporating trainable neural field filters while preserving rotational and translational symmetries. This design balances flexibility with robustness, making it well suited to learning from limited training data. We apply the NFST to 500 simulations from the CosmoGrid suite, each providing a total of 1000 square degrees of noiseless weak lensing convergence maps. We use the resulting learned field compression to model the posterior over $\Omega_m$, $\sigma_8$, and $w$ in a $w$CDM cosmology. The NFST consistently outperforms the WST benchmark, achieving a 16% increase in the average posterior probability density assigned to test data. Further, the NFST improves the precision of direct parameter prediction by 6% for $\sigma_8$ and by 11% for $w$. We also introduce a new visualization technique to interpret the learned filters in physical space and show that the NFST adapts its feature extraction to capture task-specific information. These results establish the NFST as a promising tool for extracting maximal cosmological information from the non-Gaussian features in upcoming large-scale structure surveys, without requiring large simulated training datasets.