Statistically Valid Variable Importance Assessment through Conditional Permutations

Statistically Valid Variable Importance Assessment through Conditional Permutations

Ahmad Chamma Inria Universite Paris Saclay CEA, Denis A. Engemann Roche Pharma Research and Early Development, Neuroscience and Rare Diseases, Roche Innovation Center Basel, F. Hoffmann-La Roche Ltd., Basel, Switzerland, Bertrand Thirion Inria Universite Paris Saclay CEA

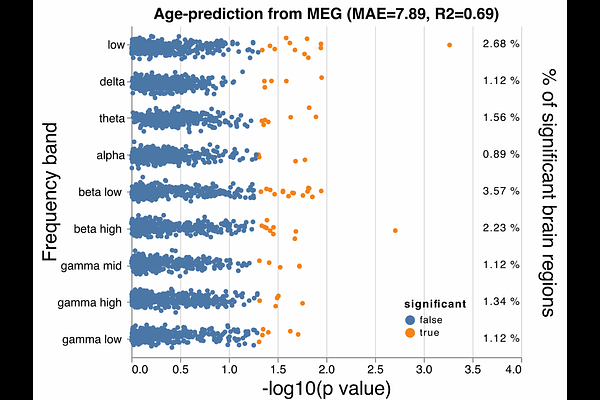

AbstractVariable importance assessment has become a crucial step in machine-learning applications when using complex learners, such as deep neural networks, on large-scale data. Removal-based importance assessment is currently the reference approach, particularly when statistical guarantees are sought to justify variable inclusion. It is often implemented with variable permutation schemes. On the flip side, these approaches risk misidentifying unimportant variables as important in the presence of correlations among covariates. Here we develop a systematic approach for studying Conditional Permutation Importance (CPI) that is model agnostic and computationally lean, as well as reusable benchmarks of state-of-the-art variable importance estimators. We show theoretically and empirically that $\textit{CPI}$ overcomes the limitations of standard permutation importance by providing accurate type-I error control. When used with a deep neural network, $\textit{CPI}$ consistently showed top accuracy across benchmarks. An empirical benchmark on real-world data analysis in a large-scale medical dataset showed that $\textit{CPI}$ provides a more parsimonious selection of statistically significant variables. Our results suggest that $\textit{CPI}$ can be readily used as drop-in replacement for permutation-based methods.