Rethinking Large-scale Pre-ranking System: Entire-chain Cross-domain Models

Jinbo Song, Ruoran Huang, Xinyang Wang, Wei Huang, Qian Yu, Mingming Chen, Yafei Yao, Chaosheng Fan, Changping Peng, Zhangang Lin, Jinghe Hu, Jingping Shao

Marketing and Commercialization Center, JD.com

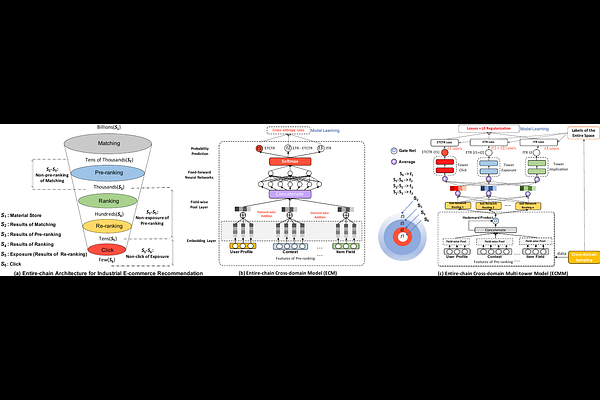

Abstract

Industrial systems such as recommender systems and online advertising are widely built on multi-stage architectures, which are divided into several cascaded modules, including matching, pre-ranking, ranking, and re-ranking. Pre-ranking is a critical bridge between matching and ranking, yet existing pre-ranking approaches mainly suffer from the sample selection bias (SSB) problem because they ignore the data dependence across the entire chain, which results in sub-optimal performance. In this paper, we rethink the pre-ranking system from the perspective of the entire sample space and propose Entire-chain Cross-domain Models (ECM), which leverage samples from all cascaded stages to effectively alleviate the SSB problem. In addition, we design a fine-grained neural structure named ECMM to further improve pre-ranking accuracy. Specifically, we propose a cross-domain multi-tower neural network that predicts the result of each stage, and we introduce a sub-network routing strategy with $L_0$ regularization to reduce computational costs. Evaluations on real-world large-scale traffic logs demonstrate that our pre-ranking models outperform SOTA methods while keeping time consumption at an acceptable level, achieving a better trade-off between efficiency and effectiveness.