uTalk: Bridging the Gap Between Humans and AI

Hussam Azzuni, Sharim Jamal, Abdulmotaleb Elsaddik

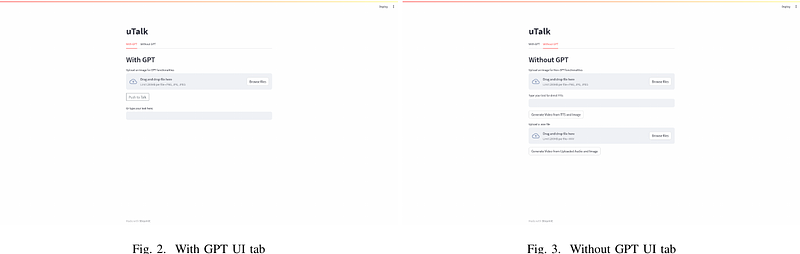

Abstract: Large Language Models (LLMs) have revolutionized various industries by improving productivity and facilitating learning across different fields. One intriguing application combines LLMs with visual models to create a novel approach to Human-Computer Interaction. The core idea behind this system is an interactive platform that lets the general public use the capabilities of ChatGPT in their daily lives. This is achieved by integrating several technologies, namely Whisper, ChatGPT, Microsoft Speech Services, and the state-of-the-art (SOTA) talking head system SadTalker, resulting in uTalk, an intelligent AI system. Users can converse with a talking portrait and receive answers to whatever questions they have in mind. They can also use uTalk for content generation by providing an input and their image. The system is hosted on Streamlit, where the user is first asked to provide an image to serve as their AI assistant. The user then chooses whether to hold a conversation or generate content. In either mode, the user provides an input, the system runs a set of operations on it, and the avatar delivers a precise response. The paper discusses how SadTalker is optimized to improve its running time by 27.72%, based on videos generated at 25 FPS. In addition, the overall performance of uTalk improved by a further 9.8% after SadTalker was integrated and parallelized with Streamlit.
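
The flow described in the abstract can be pictured as a chain of four stages. The following is only a minimal sketch under stated assumptions, not the authors' implementation: the class `UTalkPipeline`, its method names, and the four stage callables are hypothetical placeholders standing in for the roles played by Whisper, ChatGPT, Microsoft Speech Services, and SadTalker. No real library API is called, and treating content generation as the same chain without the transcription step is an assumption.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class UTalkPipeline:
    """Hypothetical wiring of the four stages named in the abstract.

    Each field is an injected callable; none of them is a real library API.
    """
    transcribe: Callable[[bytes], str]        # user speech -> text (Whisper's role)
    chat: Callable[[str], str]                # prompt -> reply text (ChatGPT's role)
    synthesize: Callable[[str], bytes]        # reply text -> speech audio (speech service's role)
    animate: Callable[[bytes, bytes], bytes]  # portrait + audio -> video (SadTalker's role)

    def respond_to_text(self, portrait: bytes, prompt: str) -> bytes:
        # Assumed content-generation path: the input is already text.
        reply = self.chat(prompt)
        speech = self.synthesize(reply)
        return self.animate(portrait, speech)

    def respond_to_speech(self, portrait: bytes, user_audio: bytes) -> bytes:
        # Conversation path: transcribe first, then reuse the text path.
        return self.respond_to_text(portrait, self.transcribe(user_audio))


# Toy stand-ins so the sketch runs end to end without any external service.
demo = UTalkPipeline(
    transcribe=lambda audio: audio.decode("utf-8"),
    chat=lambda prompt: prompt.upper(),
    synthesize=lambda text: text.encode("utf-8"),
    animate=lambda portrait, audio: portrait + b"|" + audio,
)
video = demo.respond_to_speech(b"face", b"hello")
```

Injecting the stages as callables keeps the sketch independent of any particular service, so each stage could be swapped or timed separately.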