3D-MuPPET: 3D Multi-Pigeon Pose Estimation and Tracking

Urs Waldmann, Alex Hoi Hang Chan, Hemal Naik, Máté Nagy, Iain D. Couzin, Oliver Deussen, Bastian Goldluecke, Fumihiro Kano

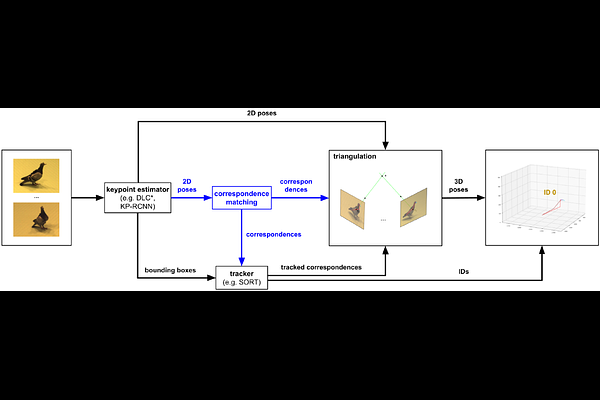

Abstract

Markerless methods for animal posture tracking have advanced rapidly in recent years, but frameworks and benchmarks for tracking large animal groups in 3D are still lacking. To close this gap, we present 3D-MuPPET, a framework to estimate and track the 3D poses of up to 10 pigeons at interactive speed using multiple camera views. We train a pose estimator to infer 2D keypoints and bounding boxes of multiple pigeons, then triangulate the keypoints to 3D. For correspondence matching, we first dynamically match 2D detections to global identities in the first frame, then use a 2D tracker to maintain correspondences across views in subsequent frames. We achieve accuracy comparable to a state-of-the-art 3D pose estimator in terms of Root Mean Square Error (RMSE) and Percentage of Correct Keypoints (PCK). We also showcase a novel use case in which our model, trained on data of single pigeons, provides comparable results on data containing multiple pigeons. This can simplify the domain shift to new species, because annotating single-animal data is less labour intensive than annotating multi-animal data. Additionally, we benchmark the inference speed of 3D-MuPPET, which reaches up to 10 fps in 2D and 1.5 fps in 3D, and perform a quantitative tracking evaluation, which yields encouraging results. Finally, we show that 3D-MuPPET also works in natural environments without model fine-tuning on additional annotations. To the best of our knowledge, we are the first to present a framework for 2D/3D posture and trajectory tracking that works in both indoor and outdoor environments.
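To make the triangulation step and the two accuracy metrics concrete, the following is a minimal sketch rather than the 3D-MuPPET implementation. It assumes calibrated cameras given as 3x4 projection matrices and uses the standard linear (DLT) triangulation; the function names `triangulate_dlt`, `rmse` and `pck` are hypothetical. The exact RMSE convention and the PCK threshold are also assumptions here: RMSE is taken over per-keypoint Euclidean distances, and the PCK threshold is passed in as an absolute distance.

```python
import numpy as np


def triangulate_dlt(proj_mats, points_2d):
    """Triangulate one 3D point from two or more views (linear DLT).

    proj_mats: iterable of 3x4 camera projection matrices (assumed known).
    points_2d: iterable of (u, v) pixel coordinates, one per view.
    """
    rows = []
    for P, (u, v) in zip(proj_mats, points_2d):
        # Each view contributes two linear constraints on the homogeneous point.
        rows.append(u * P[2] - P[0])
        rows.append(v * P[2] - P[1])
    A = np.stack(rows)
    # The solution is the right singular vector of the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]


def rmse(pred, gt):
    """Root mean square of per-keypoint Euclidean errors (assumed convention)."""
    sq_dist = np.sum((np.asarray(pred) - np.asarray(gt)) ** 2, axis=-1)
    return float(np.sqrt(np.mean(sq_dist)))


def pck(pred, gt, threshold):
    """Fraction of keypoints whose error is at most `threshold` (assumed absolute)."""
    dist = np.linalg.norm(np.asarray(pred) - np.asarray(gt), axis=-1)
    return float(np.mean(dist <= threshold))


# Toy setup: two cameras with identity intrinsics, offset by one unit along x.
P1 = np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = np.hstack([np.eye(3), np.array([[-1.0], [0.0], [0.0]])])
X_true = np.array([0.2, -0.1, 5.0])


def project(P, X):
    """Project a 3D point to pixel coordinates with projection matrix P."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]


X_est = triangulate_dlt([P1, P2], [project(P1, X_true), project(P2, X_true)])
```

In a multi-animal setting, a routine like this would run once per keypoint and per individual, after the 2D detections in each view have been assigned to the same global identity.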