Energy-Efficient Visual Search by Eye Movement and Low-Latency Spiking Neural Network

Energy-Efficient Visual Search by Eye Movement and Low-Latency Spiking Neural Network

Yunhui Zhou, Dongqi Han, Yuguo Yu

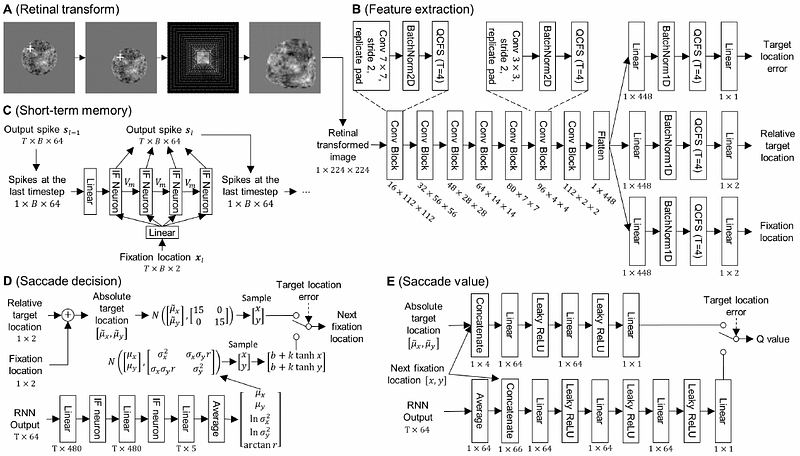

AbstractHuman vision incorporates non-uniform resolution retina, efficient eye movement strategy, and spiking neural network (SNN) to balance the requirements in visual field size, visual resolution, energy cost, and inference latency. These properties have inspired interest in developing human-like computer vision. However, existing models haven't fully incorporated the three features of human vision, and their learned eye movement strategies haven't been compared with human's strategy, making the models' behavior difficult to interpret. Here, we carry out experiments to examine human visual search behaviors and establish the first SNN-based visual search model. The model combines an artificial retina with spiking feature extraction, memory, and saccade decision modules, and it employs population coding for fast and efficient saccade decisions. The model can learn either a human-like or a near-optimal fixation strategy, outperform humans in search speed and accuracy, and achieve high energy efficiency through short saccade decision latency and sparse activation. It also suggests that the human search strategy is suboptimal in terms of search speed. Our work connects modeling of vision in neuroscience and machine learning and sheds light on developing more energy-efficient computer vision algorithms.