Heterogeneous Federated Learning with Group-Aware Prompt Tuning

Wenlong Deng, Christos Thrampoulidis, Xiaoxiao Li

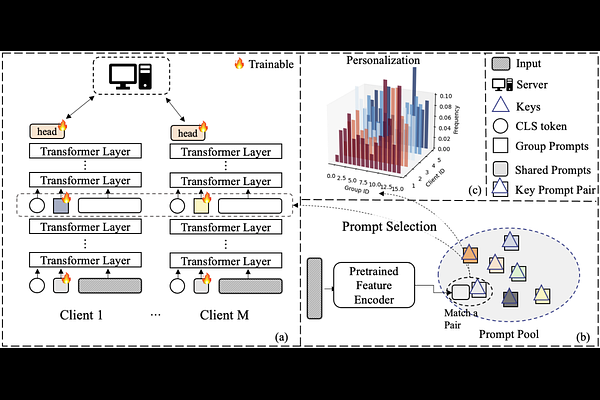

Abstract

Transformers have achieved remarkable success across machine-learning tasks, prompting their widespread adoption. In this paper, we explore their application to federated learning (FL), focusing on heterogeneous scenarios in which individual clients hold diverse local datasets. To meet the computational and communication demands of FL, we leverage pre-trained Transformers and use an efficient prompt-tuning strategy. Our strategy learns both shared prompts and group prompts, capturing universal knowledge and group-specific knowledge simultaneously. In addition, a prompt selection module assigns personalized group prompts to each input, aligning the global model with the data distribution of each client. This allows us to train a single global model that adapts automatically to different local client data distributions without requiring local fine-tuning. Our method thus bridges the gap between global and personalized local models in FL and surpasses alternative approaches that cannot adapt to previously unseen clients. We validate the effectiveness of our approach through extensive experiments and ablation studies.
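The combination of shared prompts, group prompts, and a per-input selection step can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the abstract does not specify how the selection module works, so the cosine-similarity lookup against learnable group keys, the names `select_group`, `build_input`, and `group_keys`, and all array shapes are assumptions made for illustration only.

```python
import numpy as np

def select_group(query, group_keys):
    """Return the index of the group key most cosine-similar to the query.

    Assumption: selection is a nearest-key lookup; the abstract only says
    that a selection module assigns group prompts to each input.
    """
    q = query / np.linalg.norm(query)
    k = group_keys / np.linalg.norm(group_keys, axis=1, keepdims=True)
    return int(np.argmax(k @ q))

def build_input(tokens, query, shared_prompts, group_prompts, group_keys):
    """Prepend the shared prompts and the selected group's prompts to the tokens."""
    g = select_group(query, group_keys)
    sequence = np.concatenate([shared_prompts, group_prompts[g], tokens], axis=0)
    return sequence, g

# Hypothetical sizes: embedding dim 8, 2 shared prompts, 3 groups of 4 prompts each.
rng = np.random.default_rng(0)
d = 8
shared_prompts = rng.normal(size=(2, d))    # used for every input on every client
group_prompts = rng.normal(size=(3, 4, d))  # one prompt set per group
group_keys = np.eye(3, d)                   # one key per group (orthogonal for clarity)

tokens = rng.normal(size=(5, d))                    # token embeddings of one input
query = group_keys[1] + 0.01 * rng.normal(size=d)   # input feature close to group 1

sequence, g = build_input(tokens, query, shared_prompts, group_prompts, group_keys)
print(g, sequence.shape)
```

In a federated setting, only the prompt tensors and keys would need to be trained and communicated while the pre-trained Transformer stays frozen, which is where the efficiency described in the abstract would come from. Because the group is chosen per input at inference time, the same global parameters can serve a client that was never seen during training.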