How Helpful do Novice Programmers Find the Feedback of an Automated Repair Tool?

How Helpful do Novice Programmers Find the Feedback of an Automated Repair Tool?

Oka Kurniawan, Christopher M. Poskitt, Ismam Al Hoque, Norman Tiong Seng Lee, Cyrille Jégourel, Nachamma Sockalingam

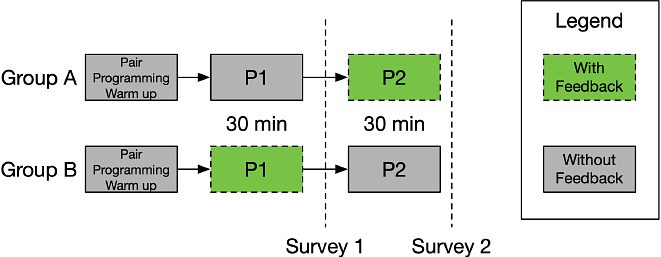

AbstractImmediate feedback has been shown to improve student learning. In programming courses, immediate, automated feedback is typically provided in the form of pre-defined test cases run by a submission platform. While these are excellent for highlighting the presence of logical errors, they do not provide novice programmers enough scaffolding to help them identify where an error is or how to fix it. To address this, several tools have been developed that provide richer feedback in the form of program repairs. Studies of such tools, however, tend to focus more on whether correct repairs can be generated, rather than how novices are using them. In this paper, we describe our experience of using CLARA, an automated repair tool, to provide feedback to novices. First, we extended CLARA to support a larger subset of the Python language, before integrating it with the Jupyter Notebooks used for our programming exercises. Second, we devised a preliminary study in which students tackled programming problems with and without support of the tool using the 'think aloud' protocol. We found that novices often struggled to understand the proposed repairs, echoing the well-known challenge to understand compiler/interpreter messages. Furthermore, we found that students valued being told where a fix was needed - without necessarily the fix itself - suggesting that 'less may be more' from a pedagogical perspective.