Exploring Non-additive Randomness on ViT against Query-Based Black-Box Attacks

Jindong Gu, Fangyun Wei, Philip Torr, Han Hu

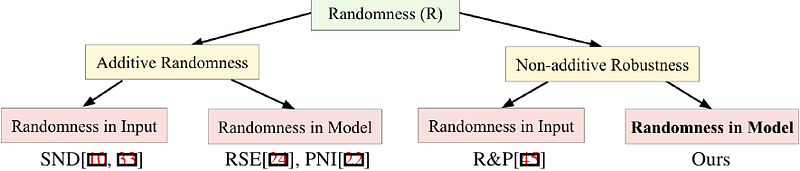

Abstract

Deep neural networks can be easily fooled by small, imperceptible perturbations. A query-based black-box attack (QBBA) crafts such perturbations using the model's output probabilities for queried images, with no access to the underlying model, and therefore poses a realistic threat to real-world applications. Recently, various types of robustness have been explored to defend against QBBA. In this work, we first taxonomize the stochastic defense strategies against QBBA. Following our taxonomy, we propose to explore non-additive randomness in models as a defense against QBBA. Specifically, we focus on the underexplored Vision Transformers (ViTs), motivated by their flexible architectures. Extensive experiments show that the proposed approach defends effectively against QBBA without much sacrifice in performance.