Reality3DSketch: Rapid 3D Modeling of Objects from Single Freehand Sketches

Tianrun Chen, Chaotao Ding, Lanyun Zhu, Ying Zang, Yiyi Liao, Zejian Li, Lingyun Sun

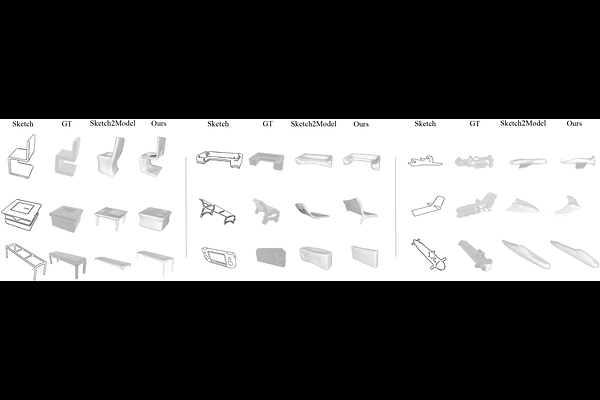

Abstract: The emerging trend of AR/VR places great demands on 3D content. However, most existing software requires expertise and is difficult for novice users to use. In this paper, we aim to create sketch-based modeling tools for user-friendly 3D modeling. We introduce Reality3DSketch, a novel application offering an immersive 3D modeling experience, in which a user captures the surrounding scene with a monocular RGB camera and draws a single sketch of an object in the 3D scene as it is reconstructed in real time. A 3D object is then generated and placed at the desired location, enabled by our novel neural network, which takes a single sketch as input. The network predicts the pose of the drawing and turns the sketch into a 3D model with view and structural awareness, which addresses the challenges of sparse sketch input and view ambiguity. We conducted extensive experiments on synthetic and real-world datasets and achieved state-of-the-art (SOTA) results in both sketch view estimation and 3D modeling performance. According to our user study, our method of performing 3D modeling in a scene is more than 5x faster than conventional methods. Users are also more satisfied with the generated 3D models than with the results of existing methods.