OpinSummEval: Revisiting Automated Evaluation for Opinion Summarization

Yuchen Shen, Xiaojun Wan

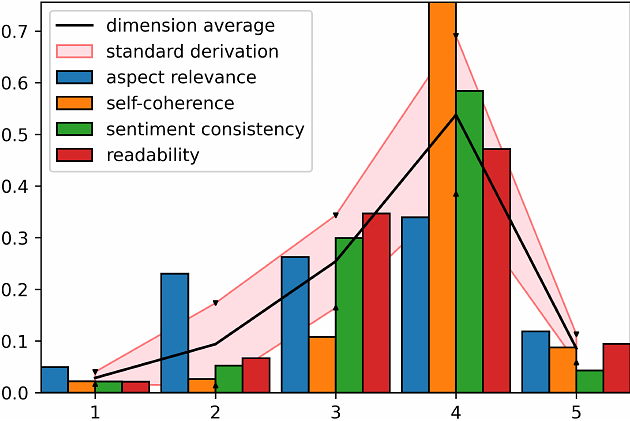

Abstract

Opinion summarization differs from other summarization tasks in its distinctive focus on aspects and sentiments. Although automated evaluation metrics such as ROUGE are widely used, we find them to be unreliable measures of the quality of opinion summaries. In this paper, we present OpinSummEval, a dataset comprising human judgments and outputs from 14 opinion summarization models. We further examine the correlation between 24 automatic metrics and human ratings across four dimensions. Our findings indicate that metrics based on neural networks generally outperform non-neural ones. However, even metrics built on powerful backbones, such as BART and GPT-3/3.5, do not correlate consistently well with human ratings across all dimensions, highlighting the need for advances in automated evaluation methods for opinion summarization. The code and data are publicly available at https://github.com/A-Chicharito-S/OpinSummEval/tree/main.
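To make the metric-versus-human comparison concrete, the following is a minimal sketch of how an automatic metric's scores might be correlated with human ratings on a single dimension. The abstract does not state which correlation coefficient is used, so Kendall's tau-b is an assumption here, and the function name `kendall_tau_b`, the metric names, and all numbers are hypothetical illustrations rather than values from OpinSummEval.

```python
from itertools import combinations
from math import sqrt


def kendall_tau_b(x, y):
    """Kendall's tau-b rank correlation between two equal-length score lists.

    Tau-b corrects for ties, which are common when human ratings use a
    small integer scale.
    """
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    concordant = discordant = tied_x_only = tied_y_only = 0
    for (x1, y1), (x2, y2) in combinations(zip(x, y), 2):
        dx, dy = x1 - x2, y1 - y2
        if dx == 0 and dy == 0:
            continue  # tied in both lists: excluded from both denominator terms
        if dx == 0:
            tied_x_only += 1
        elif dy == 0:
            tied_y_only += 1
        elif dx * dy > 0:
            concordant += 1
        else:
            discordant += 1
    untied_in_x = concordant + discordant + tied_y_only
    untied_in_y = concordant + discordant + tied_x_only
    denom = sqrt(untied_in_x * untied_in_y)
    return (concordant - discordant) / denom if denom else float("nan")


# Hypothetical data: human ratings for five summaries on one dimension,
# and scores assigned to the same summaries by two made-up metrics.
human_ratings = [1, 2, 2, 4, 5]
metric_scores = {
    "metric_a": [0.10, 0.30, 0.25, 0.70, 0.90],
    "metric_b": [0.80, 0.20, 0.60, 0.40, 0.50],
}

for name, scores in metric_scores.items():
    print(f"{name}: tau-b = {kendall_tau_b(scores, human_ratings):.3f}")
```

Under this sketch, a metric whose ranking of summaries agrees with the human ranking yields a value near 1, while a metric that ranks summaries independently of human judgment yields a value near 0. Repeating the computation per dimension would give one correlation per metric and dimension.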