Spatial and Temporal Attention-based emotion estimation on HRI-AVC dataset

Karthik Subramanian, Saurav Singh, Justin Namba, Jamison Heard, Christopher Kanan, Ferat Sahin

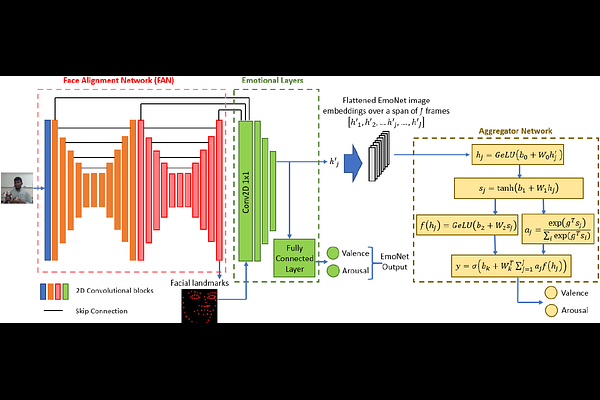

Abstract: Many attempts have been made at estimating discrete emotions (calmness, anxiety, boredom, surprise, anger) and the continuous emotional measures commonly used in psychology, namely 'valence' (the pleasantness of the emotion being displayed) and 'arousal' (the intensity of the emotion being displayed). Existing methods for estimating arousal and valence rely on learning from data sets in which an expert annotator labels every image frame. Access to an expert annotator is not always possible, and the annotation can be tedious. It is therefore more practical to obtain self-reported arousal and valence values directly from the human in a real-time human-robot collaborative setting. This paper provides an emotion data set (HRI-AVC) obtained while conducting a human-robot interaction (HRI) task. Each self-reported pair of labels in this data set is associated with a set of image frames. This paper also proposes a spatial and temporal attention-based network to estimate arousal and valence from this set of image frames. The results show that an attention-based network can estimate valence and arousal on the HRI-AVC data set even when arousal and valence values are unavailable per frame.