MO-YOLO: End-to-End Multiple-Object Tracking Method with YOLO and MOTR

Liao Pan, Yang Feng, Wu Di, Liu Bo, Zhang Xingle

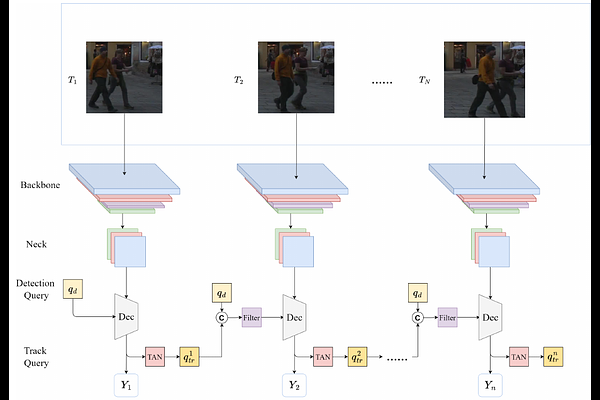

Abstract: This paper addresses critical issues in Multi-Object Tracking (MOT) by proposing an efficient, lightweight end-to-end multi-object tracking model named MO-YOLO. Traditional MOT methods typically involve two separate steps, object detection and object tracking, which leads to computational complexity and error propagation. Recent research has demonstrated outstanding performance from end-to-end MOT models based on Transformer architectures, but these models require substantial hardware support. MO-YOLO combines the strengths of YOLO and RT-DETR models to construct a high-efficiency, lightweight end-to-end multi-object tracking network, offering new opportunities in the multi-object tracking domain. On the MOT17 dataset, MOTR\cite{zeng2022motr} requires training with 8 GeForce 2080 Ti GPUs for 4 days to achieve satisfactory results, while MO-YOLO requires only 1 GeForce 2080 Ti GPU and 12 hours of training to achieve comparable performance.