Train Once, Forget Precisely: Anchored Optimization for Efficient Post-Hoc Unlearning

Train Once, Forget Precisely: Anchored Optimization for Efficient Post-Hoc Unlearning

Prabhav Sanga, Jaskaran Singh, Arun K. Dubey

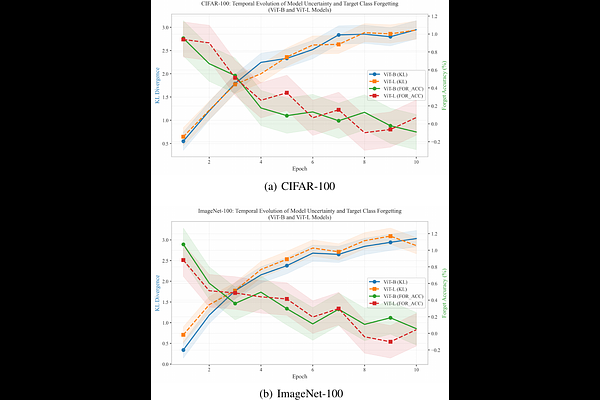

AbstractAs machine learning systems increasingly rely on data subject to privacy regulation, selectively unlearning specific information from trained models has become essential. In image classification, this involves removing the influence of particular training samples, semantic classes, or visual styles without full retraining. We introduce \textbf{Forget-Aligned Model Reconstruction (FAMR)}, a theoretically grounded and computationally efficient framework for post-hoc unlearning in deep image classifiers. FAMR frames forgetting as a constrained optimization problem that minimizes a uniform-prediction loss on the forget set while anchoring model parameters to their original values via an $\ell_2$ penalty. A theoretical analysis links FAMR's solution to influence-function-based retraining approximations, with bounds on parameter and output deviation. Empirical results on class forgetting tasks using CIFAR-10 and ImageNet-100 demonstrate FAMR's effectiveness, with strong performance retention and minimal computational overhead. The framework generalizes naturally to concept and style erasure, offering a scalable and certifiable route to efficient post-hoc forgetting in vision models.