CARSO: Blending Adversarial Training and Purification Improves Adversarial Robustness

Emanuele Ballarin, Alessio Ansuini, Luca Bortolussi

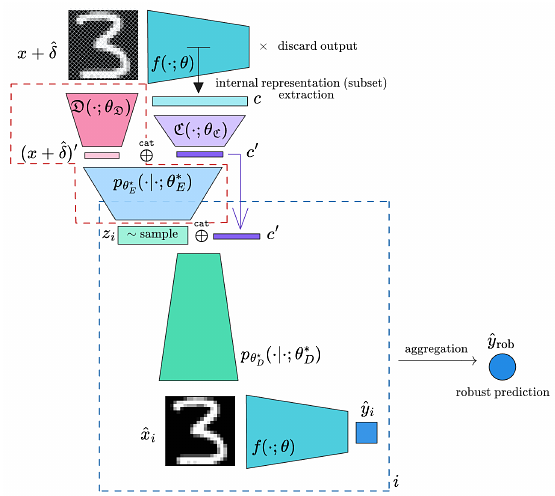

Abstract

In this work, we propose CARSO, a novel adversarial defence mechanism for image classification that blends the paradigms of adversarial training and adversarial purification in a mutually beneficial, robustness-enhancing way. The method builds upon an adversarially trained classifier and learns to map its internal representation of a potentially perturbed input onto a distribution of tentative clean reconstructions. Multiple samples from this distribution are classified by the same adversarially trained model, and an aggregation of its outputs constitutes the final robust prediction. Experimental evaluation on a well-established benchmark of varied, strong adaptive attacks, across different image datasets and classifier architectures, shows that CARSO defends against both foreseen and unforeseen threats, including adaptive end-to-end attacks devised for stochastic defences. At the cost of a tolerable reduction in clean accuracy, our method improves the state of the art for CIFAR-10 and CIFAR-100 $\ell_\infty$ robust classification accuracy against AutoAttack by a significant margin. Code and pre-trained models are available at https://github.com/emaballarin/CARSO.
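The inference procedure summarised in the abstract can be illustrated with a minimal, standard-library Python sketch. It is not the authors' implementation: the names `robust_predict`, `toy_classify`, and `toy_sample_reconstruction` are hypothetical, the classifier and purifier are toy stand-ins, and averaging softmax probabilities is assumed here only as one simple choice of aggregation, since the abstract does not specify the rule.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def softmax(logits: Sequence[float]) -> Vector:
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def robust_predict(
    x: Vector,
    classify: Callable[[Vector], Tuple[Vector, Vector]],
    sample_reconstruction: Callable[[Vector, random.Random], Vector],
    n_samples: int = 8,
    seed: int = 0,
) -> Tuple[int, Vector]:
    """Classify several sampled reconstructions of x and aggregate the outputs.

    `classify` returns (logits, internal_representation).
    `sample_reconstruction` draws one tentative clean input, conditioned on
    the internal representation. Both are hypothetical interfaces.
    """
    rng = random.Random(seed)
    # 1. Internal representation of the (possibly perturbed) input.
    _, representation = classify(x)
    mean_probs: Vector = []
    for _ in range(n_samples):
        # 2. Sample one tentative clean reconstruction.
        x_hat = sample_reconstruction(representation, rng)
        # 3. Classify it with the same classifier.
        logits, _ = classify(x_hat)
        probs = softmax(logits)
        if not mean_probs:
            mean_probs = [0.0] * len(probs)
        # 4. Aggregate (ASSUMPTION: plain average of class probabilities).
        mean_probs = [a + p / n_samples for a, p in zip(mean_probs, probs)]
    label = max(range(len(mean_probs)), key=mean_probs.__getitem__)
    return label, mean_probs


# Toy stand-ins, used only to make the sketch executable.
def toy_classify(x: Vector) -> Tuple[Vector, Vector]:
    mean = sum(x) / len(x)
    # Two classes: class 1 is favoured when the mean pixel value is positive.
    return [-5.0 * mean, 5.0 * mean], [mean]


def toy_sample_reconstruction(representation: Vector, rng: random.Random) -> Vector:
    # A 4-pixel "image" centred on the encoded mean, with small sampling noise.
    return [representation[0] + rng.gauss(0.0, 0.1) for _ in range(4)]


label, probs = robust_predict(
    [0.9, 1.1, 1.0, 1.0], toy_classify, toy_sample_reconstruction
)
print(label, probs)
```

In a real setting, `classify` would wrap the adversarially trained network and `sample_reconstruction` the learned purifier. The sketch fixes only the order of operations: encode, sample, re-classify, aggregate.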