User Attitudes to Content Moderation in Web Search

User Attitudes to Content Moderation in Web Search

Aleksandra Urman, Aniko Hannak, Mykola Makhortykh

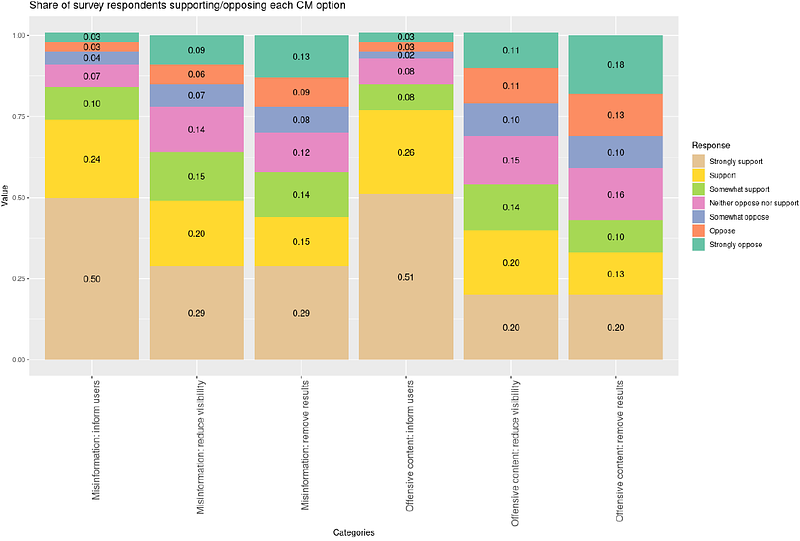

AbstractInternet users highly rely on and trust web search engines, such as Google, to find relevant information online. However, scholars have documented numerous biases and inaccuracies in search outputs. To improve the quality of search results, search engines employ various content moderation practices such as interface elements informing users about potentially dangerous websites and algorithmic mechanisms for downgrading or removing low-quality search results. While the reliance of the public on web search engines and their use of moderation practices is well-established, user attitudes towards these practices have not yet been explored in detail. To address this gap, we first conducted an overview of content moderation practices used by search engines, and then surveyed a representative sample of the US adult population (N=398) to examine the levels of support for different moderation practices applied to potentially misleading and/or potentially offensive content in web search. We also analyzed the relationship between user characteristics and their support for specific moderation practices. We find that the most supported practice is informing users about potentially misleading or offensive content, and the least supported one is the complete removal of search results. More conservative users and users with lower levels of trust in web search results are more likely to be against content moderation in web search.